Getting Started

This Getting Started guide helps new users install, start and work with INCEpTION. It gives a quick overview of the key functionalities (estimated reading time: approx. 20-30 minutes) so that you can get familiar with the tool. For the sake of simplicity, it leaves out special cases and details and focuses on the first steps. See our documentation for further reading on any topic. You are already in the User Guide document; its main part, Core Functionalities, starts right after the Getting Started section.

For quick overviews, also see our tutorial videos e.g. covering an Introduction, an Overview, Recommender Basics and Entity Linking. Getting Started will refer to them wherever it might be helpful.

| Boxes: In Getting Started, these boxes provide additional information. They may be skipped for fast reading if background knowledge exists. Also, they may be consulted later on for a quick look-up on basic concepts. |

After the Introduction, Getting Started leads you to using INCEpTION in three steps:

- We will see how to install it in Installing and starting INCEpTION.
- Project Settings and Structure of an Annotation Project provide a basic orientation and explain the structure of a project.
- You will be guided to make your first annotations in First Annotations with INCEpTION.

Introduction

What can INCEpTION be used for?

For a first impression on what INCEpTION is, you may want to watch our introduction video.

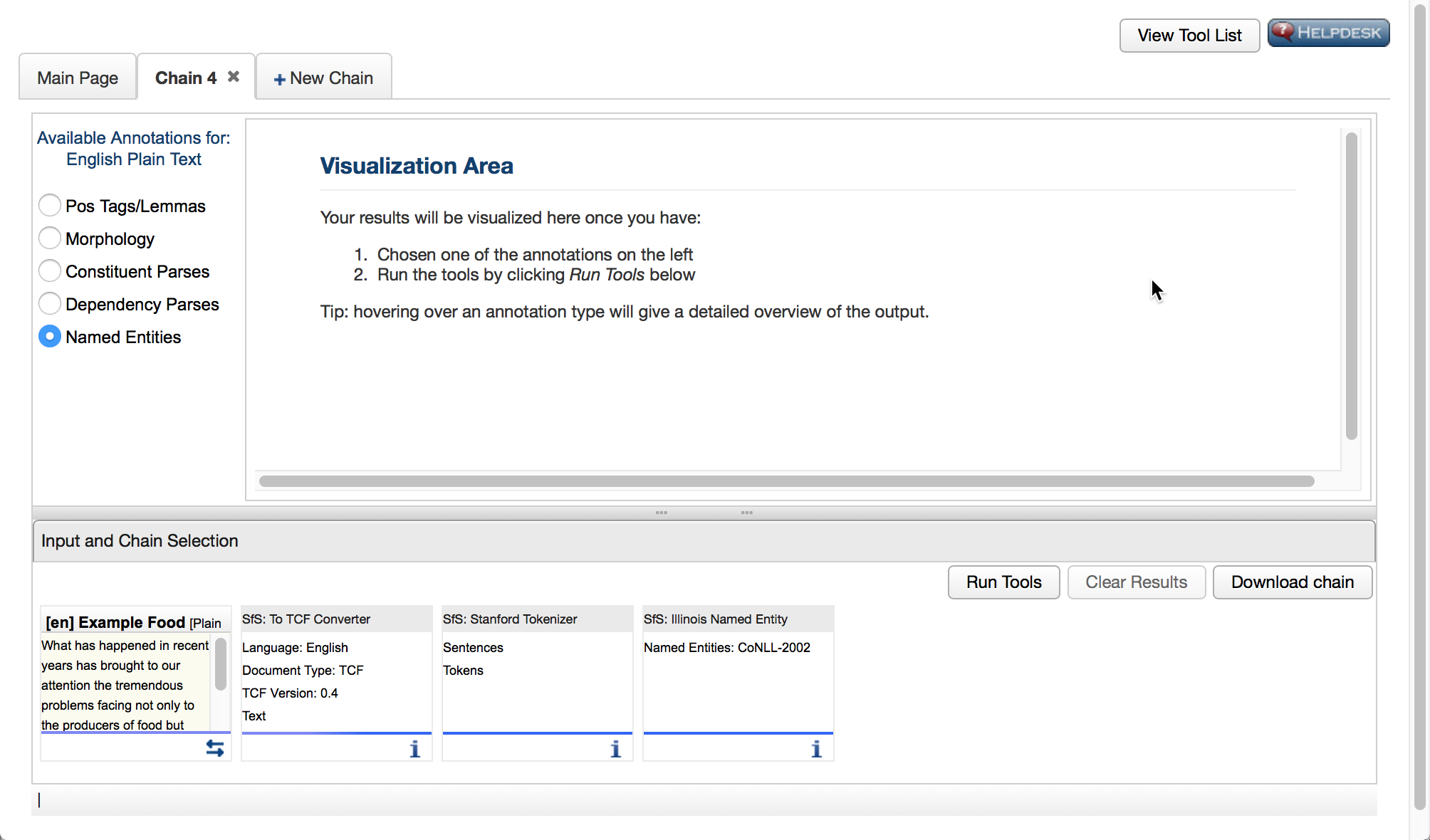

INCEpTION is a text-annotation environment useful for various kinds of annotation tasks on written text. Annotations are usually used for linguistic and/or machine learning concerns. INCEpTION is a web application in which several users can work on the same annotation project and it can contain several annotation projects at a time. It provides a recommender system to help you create annotations faster and easier. Beyond annotating, you can also create a corpus by searching an external document repository and adding documents. Moreover, you can use knowledge bases, e.g. for tasks like entity linking.

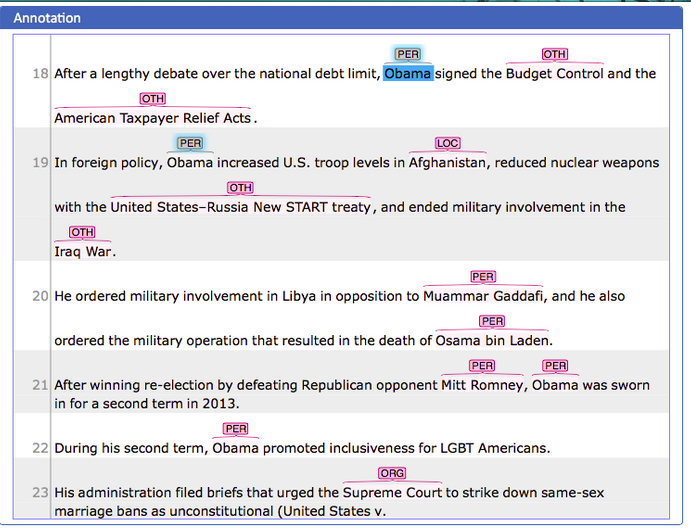

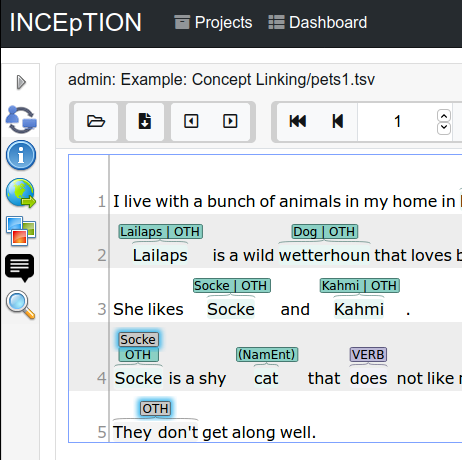

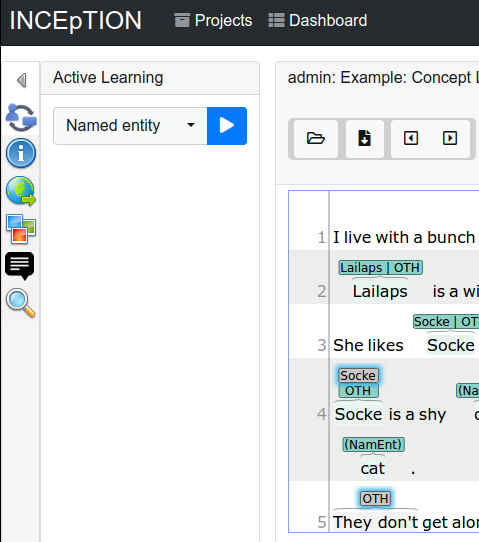

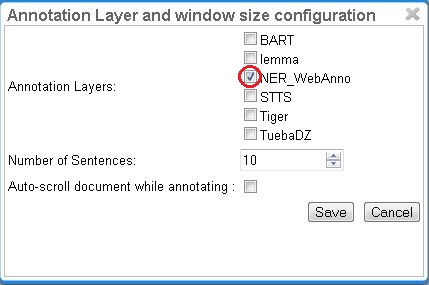

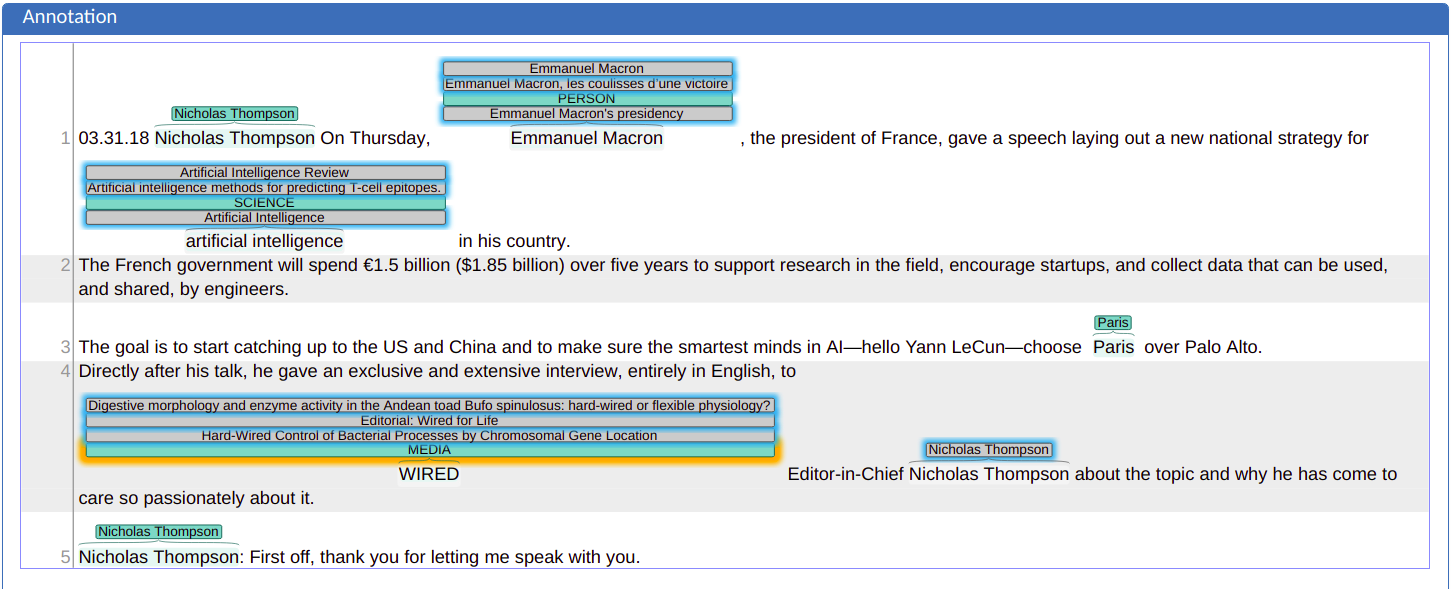

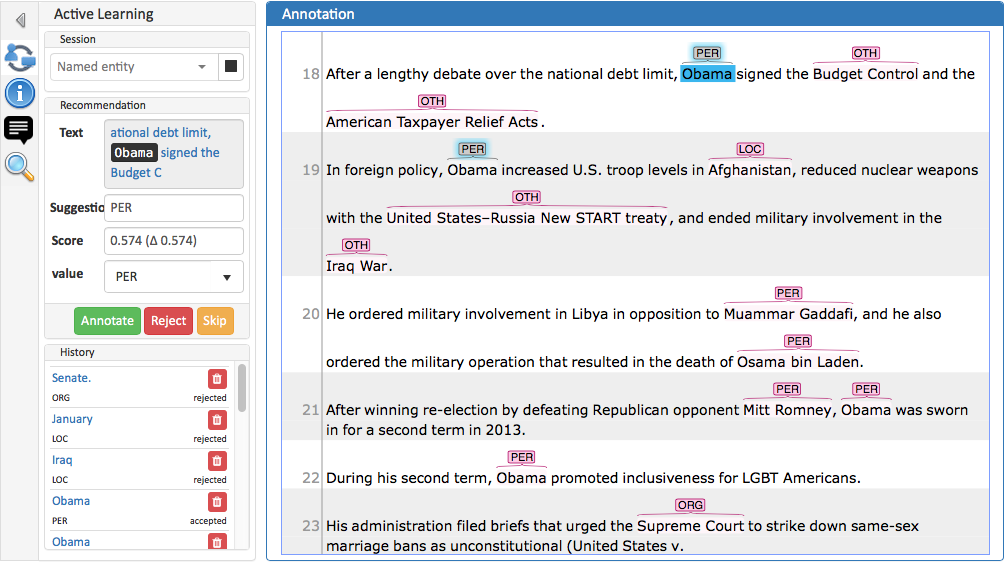

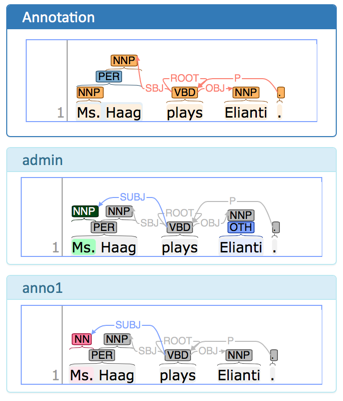

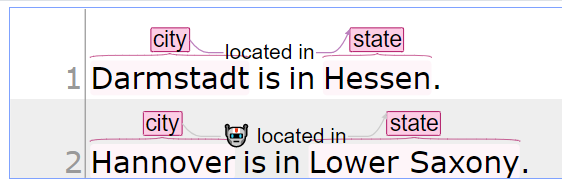

The following picture gives you a first impression of what annotated texts look like. In this example, text spans have been annotated according to whether they refer to a person (PER), location (LOC), organization (ORG) or any other entity (OTH).

INCEpTION’s key features are: First, before you annotate, you need a corpus to be annotated (Corpus Creation).

You might have one already and import it or create it in INCEpTION.

Second, you might want to annotate the corpus (Annotation) and/or merge the annotations which different annotators made (Curation).

Third, you might want to integrate external knowledge used for annotating (Knowledge Bases).

You can do all three steps with INCEpTION or only one or two.

In addition, INCEpTION is extendable and adaptable to individual requirements.

Often, it provides predefined elements, like knowledge bases, layers and tagsets to give you a starting point but you can also modify them or create your own from scratch.

You may for example integrate a knowledge base of your choice; create and modify custom knowledge bases; create and modify custom layers and tagsets to annotate your individual task; build custom so-called recommenders which automatically suggest annotations to you so you will work quicker and easier; and much more.

Getting Started focuses on annotating. For details on any other topic like Corpus Creation or the like, see the main documentation part of this User Guide: Core Functionalities.

Do you have questions or feedback?

INCEpTION is still in development, so you are welcome to give us feedback and tell us your wishes and requirements.

- For many questions, you will find answers in the main documentation: Core Functionalities.
- Consider our Google group inception users and mailing list: inception-users@googlegroups.com
- You can also open an issue on GitHub.

See our documentation for further reading

Our main documentation consists of three distinct documents:

- User Guide: If you only use INCEpTION and do not develop it, the User Guide beginning right after Getting Started is the one of your choice. If it does not answer your questions, don’t hesitate to contact us (see Do you have questions or feedback?).

| User Guide-Shortcuts: Whenever you find a blue question mark sign in the INCEpTION application, you may click on it to be linked to the respective section of the User Guide. |

- Admin Guide: For information on how to set up INCEpTION for a group of users on a server and more installation details, see the Admin Guide.
- Developer Guide: INCEpTION is open source. So if you would like to develop for it, the Developer Guide might be interesting for you.

All materials, including this guide, are available via the INCEpTION homepage.

Installing and starting INCEpTION

| Hey system operators and admins! If you are not installing INCEpTION for yourself but for somebody else or for a group of users on a server, if you want to perform a Docker-based deployment or if you need information on similarly advanced topics (logging, monitoring, backup, etc.), please skip this section and go directly to the Admin Guide. |

Installing Java

In order to run INCEpTION, you need to have Java version 11 or higher installed. If you do not have Java installed yet, please install the latest Java version, e.g. from AdoptOpenJDK.
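To check which Java version is installed before you continue, a small terminal sketch like the following may help. It assumes that the `java` command is on your PATH and that `java -version` prints the version in double quotes, as common Java distributions do; the helper name `java_major` is made up for this illustration.

```shell
# Hypothetical helper: extract the major version from a Java version string.
# Old-style strings such as "1.8.0_292" mean Java 8; newer ones such as
# "11.0.2" or "17" start with the major version directly.
java_major() {
  v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # strip the legacy "1." prefix
  esac
  echo "${v%%[._-]*}"     # keep everything before the first ".", "_" or "-"
}

if command -v java >/dev/null 2>&1; then
  # java -version writes to stderr, so redirect it to stdout first
  version=$(java -version 2>&1 | awk -F'"' '/version/ { print $2; exit }')
  major=$(java_major "$version")
  if [ "$major" -ge 11 ] 2>/dev/null; then
    echo "Java $version found: sufficient for INCEpTION"
  else
    echo "Java $version found: version 11 or higher is required"
  fi
else
  echo "Java was not found on the PATH: please install it first"
fi
```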

Download and start INCEpTION

In this section, we will download, open and log in to INCEpTION. Afterwards, we will download and import an Example Project:

Step 1 - Download: Download the .jar-file from our website by clicking on INCEpTION x.xx.x (executable JAR) (instead of “x.xx.x”, there will be the number of the latest release). Wait a minute until it has been fully downloaded, that is, until the name of the downloaded file ends in “.jar” and no longer in “.jar.part”.

| Working with the latest version: We recommend always working with the latest version since we constantly add new features, improve usability and fix bugs. After downloading the latest version, your previous work will not be lost: within a new version you will generally find all your projects, documents, users etc. as before without doing anything. However, please consult the release notes on this beforehand. To be notified when a new version has been released, please check the website, subscribe to GitHub notifications or the Google group (see Do you have questions or feedback?). |

Step 2 - Open: There are two ways to open the application: Either by double-clicking on it or via the terminal.

Step 2a - Open via double-click: Now, simply double-click on the downloaded .jar-file. After a moment, a splash screen will display. It shows that the application is loading.

| In case INCEpTION does not start: If double-clicking the JAR file does not start INCEpTION, you might need to make the file executable first. Right-click on the JAR file and navigate through the settings and permissions. There, you can mark it as executable. |
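On Linux or macOS, the same permission can usually also be set from the terminal. The following is a minimal sketch, assuming the JAR file is in the current folder; the helper name `make_executable` is made up for this illustration, and “x.xx.x” stands for the version you downloaded.

```shell
# Hypothetical helper: mark a file as executable for the current user.
make_executable() {
  if [ -f "$1" ]; then
    chmod u+x "$1"
  else
    echo "File not found: $1" >&2
    return 1
  fi
}

# Replace x.xx.x with the version you downloaded.
make_executable "inception-app-standalone-x.xx.x.jar" || true
```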

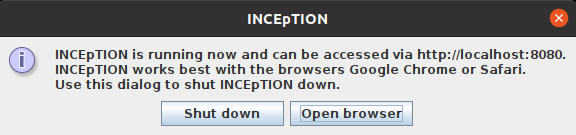

Once the initialization is complete, a dialog appears. Here, you can open the application in your default browser or shut it down again:

Step 2b - Open via terminal: If you prefer the command line, you may enter this command instead of double-clicking. Make sure that instead of “x.xx.x”, you enter the version you downloaded:

$ java -jar inception-app-standalone-x.xx.x.jar

In this case, no splash screen will appear. Just go to http://localhost:8080 in your browser.

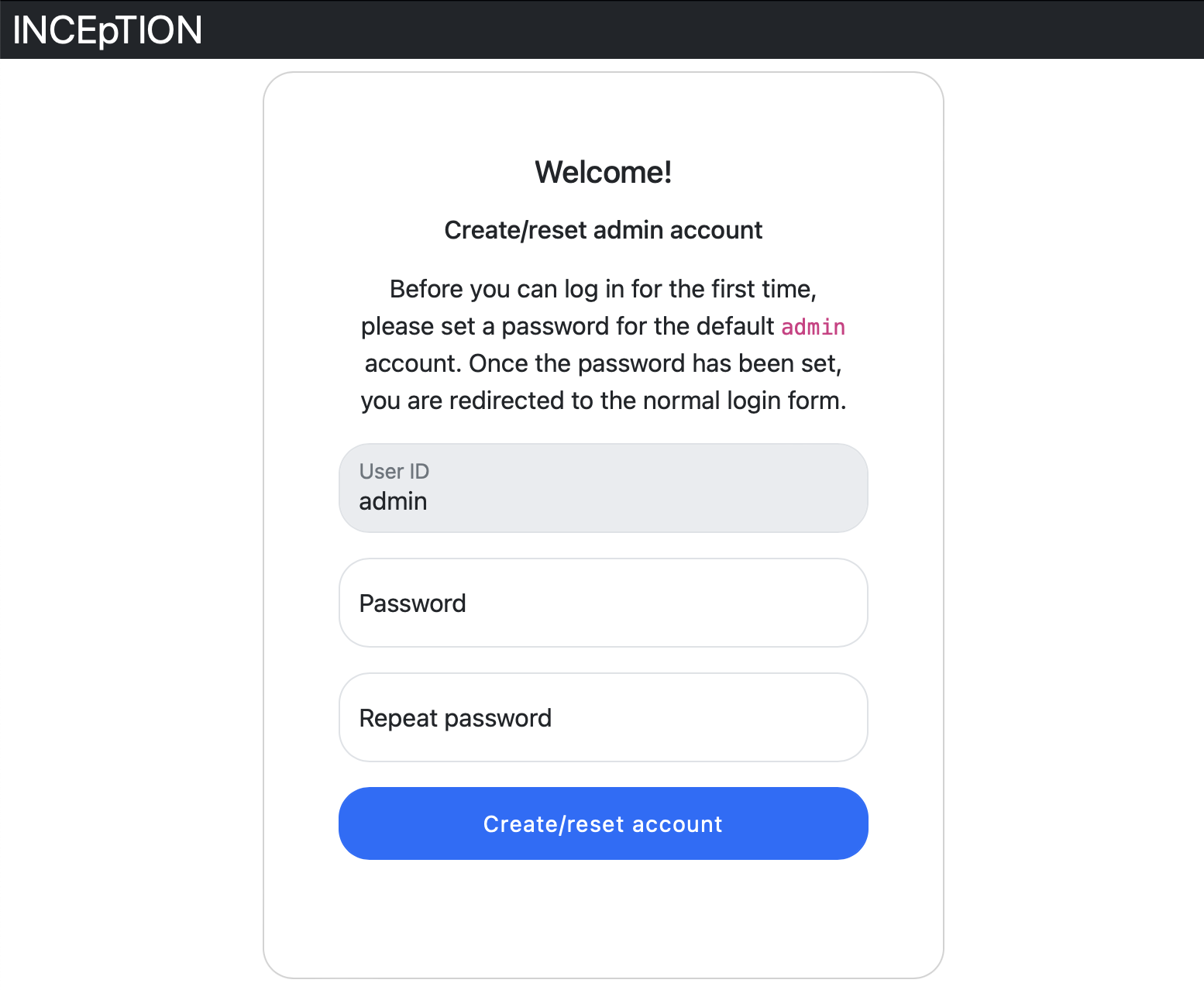

Step 3 - Log in: The first time you start the application, you will be asked to set a password for the default admin user. You need to enter this password into two separate fields. It will be accepted and saved only if the same password has been entered into both fields. After the password has been set, you will be redirected to the regular login screen where you can log in using the username admin and the password you have just set.

You have finished the installation.

| INCEpTION is designed for the browsers Chrome, Safari and Firefox. It does work in other browsers as well but for these three, we can support you best. For more installation details, see the Admin Guide. |

Download and import an Example Project

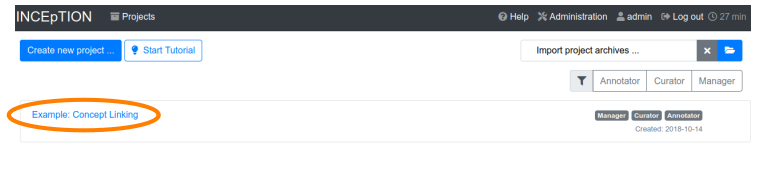

In order to understand what you read in this guide, it makes sense to have an annotation project to look at and click through. We created several example projects for you to play with. You find them in the section Example Projects on our website.

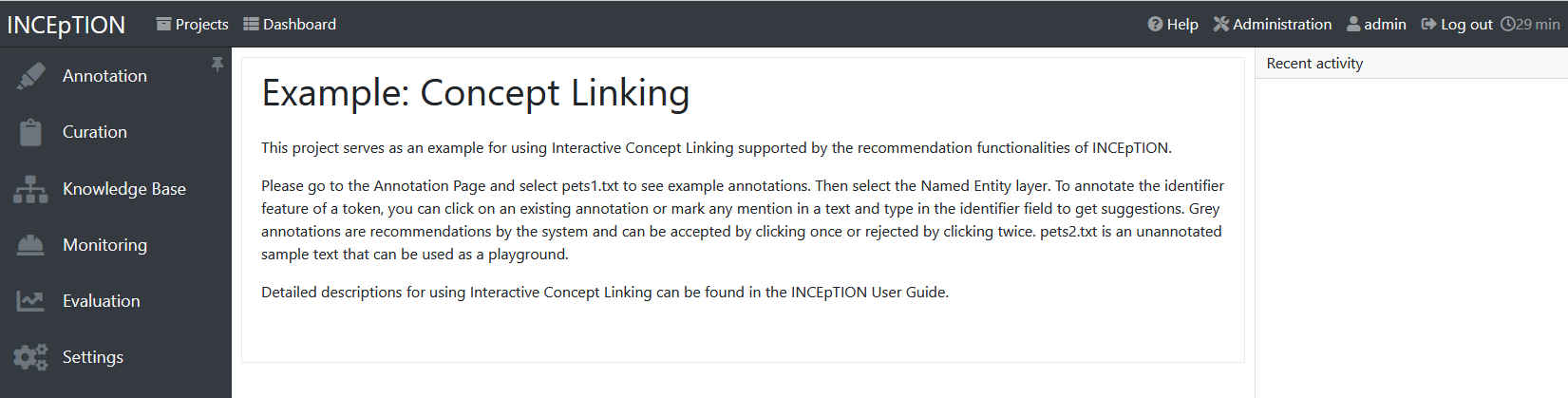

Step 1 - Download: For this guide, we use the Interactive Concept Linking project. Please download it from the Example Projects section on our website and save it without extracting it first. It consists of two documents about pets. The first one contains some annotations as an example, the second one is meant to be your playground. It has originally been created for concept linking annotation but in every project, you can create any kind of annotations. We will use it for Named Entity Recognition.

| Named Entity Recognition: This is a certain kind of annotation. In Getting Started, we use it to tell whether the annotated text part refers to a person (in INCEpTION, the built-in tag for person is PER), organization (ORG), location (LOC) or any other (OTH). The respective layer to annotate person/organization/location/other is the Named Entity layer. If you are not sure what layers are, check the box on Layers and Features in the section Project Settings. Also see Concept Linking in the User Guide. |

-

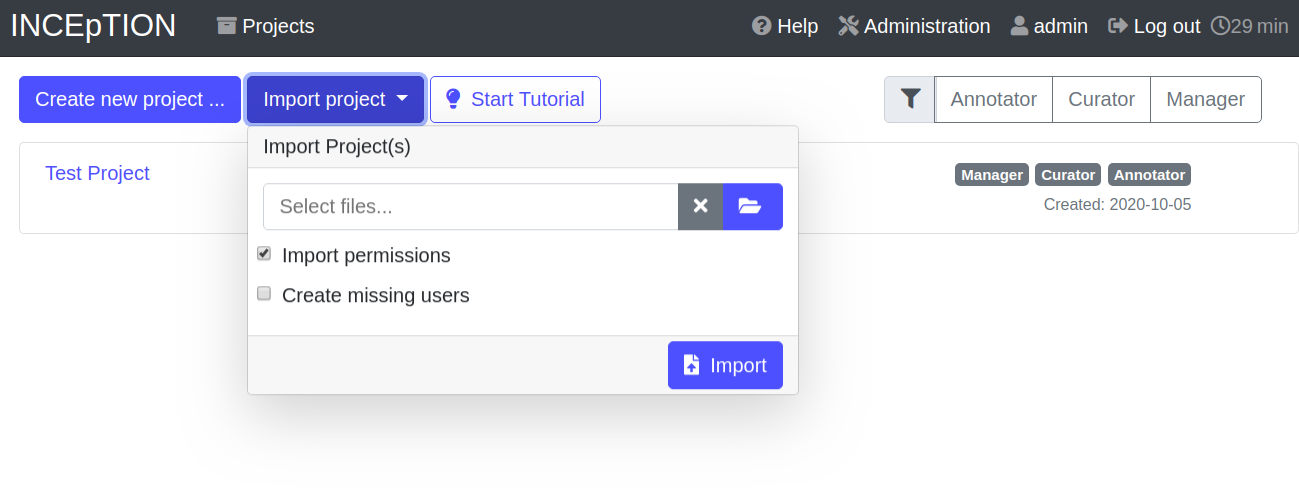

Step 2 - Import: After logging in to INCEpTION, click on the Import project button on the top left (next to Create new project) and browse for the example project you have downloaded in Step 1. Finally, click Import. The project has now been added and you can use it to follow the explanations of the next section.

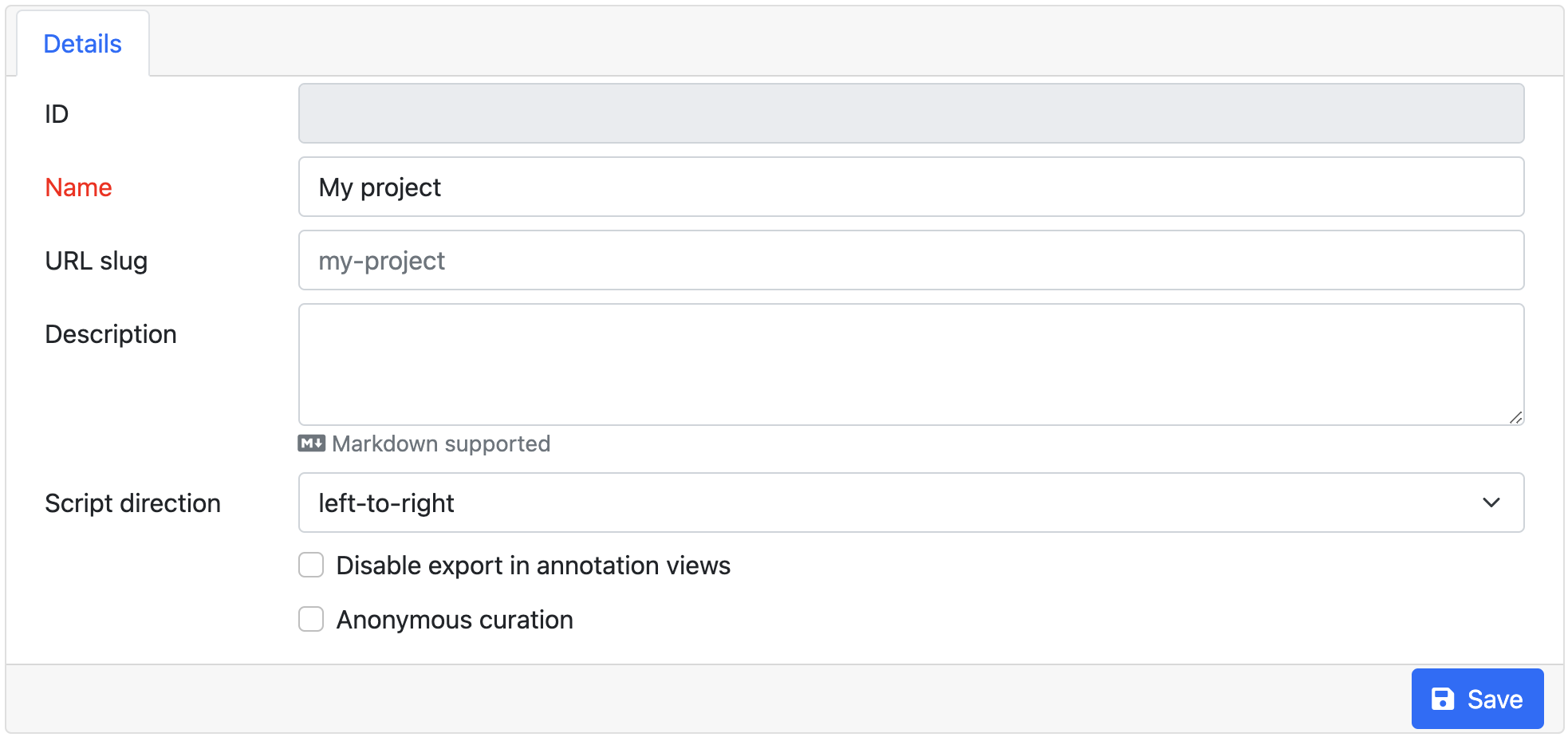

Project Settings

In this section we will see what elements each project has and where you can adjust these elements by examining the Project Settings. Note that you may have different projects in INCEpTION at the same time.

If you prefer to make some annotations first, you may go on with First Annotations with INCEpTION and return later.

Each project consists at least of the following elements. There are more optional elements such as tagsets, document repositories etc. but to get started, we will focus on the most important ones:

- one or (usually) more Documents to annotate
- one or (usually) more Users to work on the project
- one or (usually) more Layers to annotate with
- Optional: one or more Knowledge Base/s
- Optional: Recommenders to automatically suggest annotations
- Optional: Guidelines for you and your team

For a quick overview on the settings, you might want to watch our tutorial video Overview. As for all topics of Getting Started, you will find more details on each of them in the main documentation on INCEpTION’s Core Functionalities.

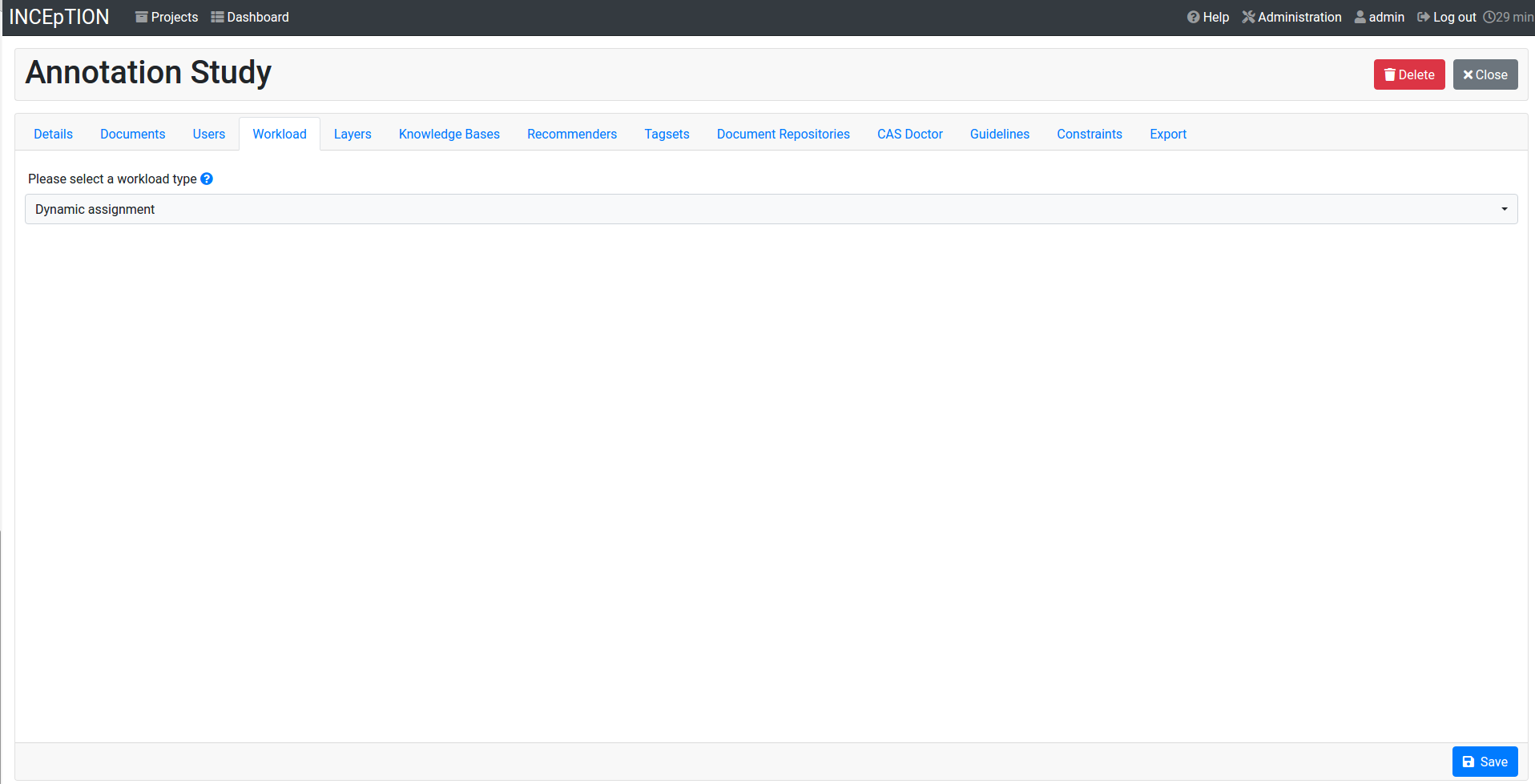

The Settings provide a tab for each of these elements. There are more tabs but we focus on the most important ones to get started. You reach the settings after logging in when you click on the name of a project and then on Settings on the left. If you have not imported the example project yet, we suggest following the instructions in Download and import an Example Project first.

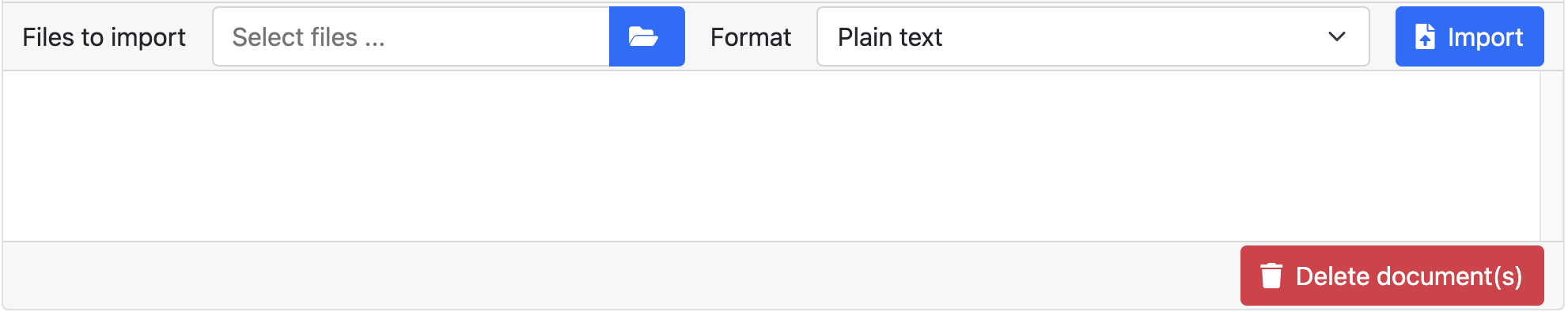

Documents

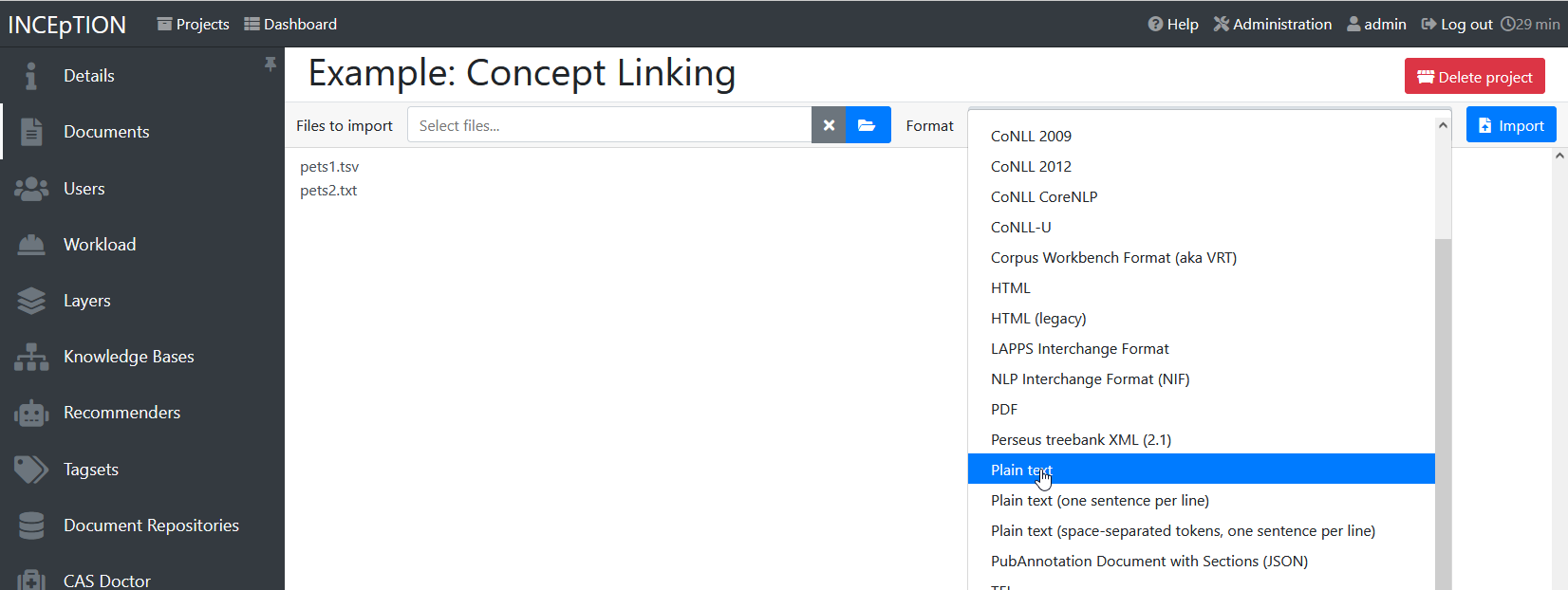

Here, you may upload your files to be annotated. Make sure that the format selected in the dropdown on the right is the same as the one of the file to be uploaded.

| Formats: For details on the different formats INCEpTION provides for importing and exporting single documents as well as whole projects, you may check the main documentation, Appendix A: Formats. |

| INCEpTION Instance vs. Project: In some cases, we have to distinguish between the INCEpTION instance we are working in and the project(s) it contains. For example, a user may be added to the INCEpTION instance but not to a certain project. Or she may have different rights in several projects. |

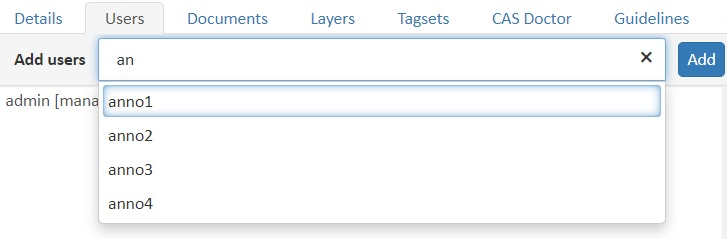

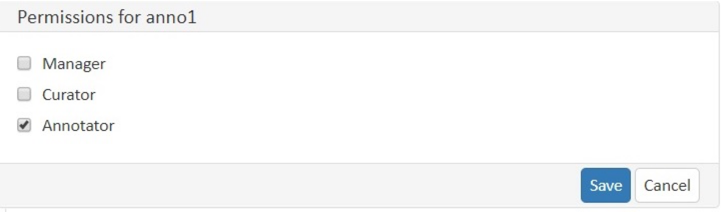

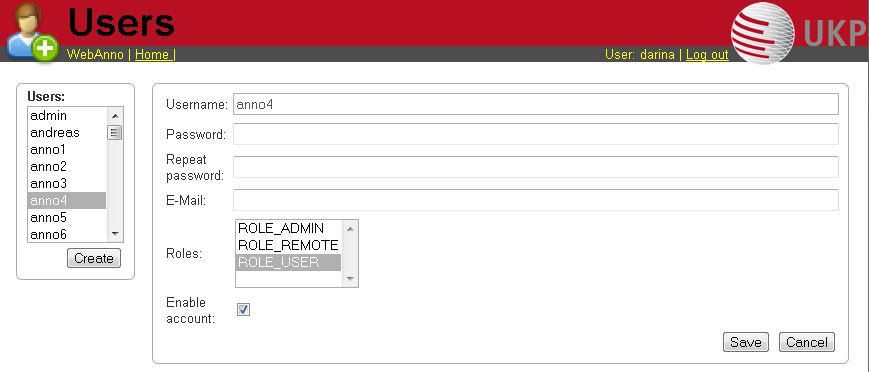

Users

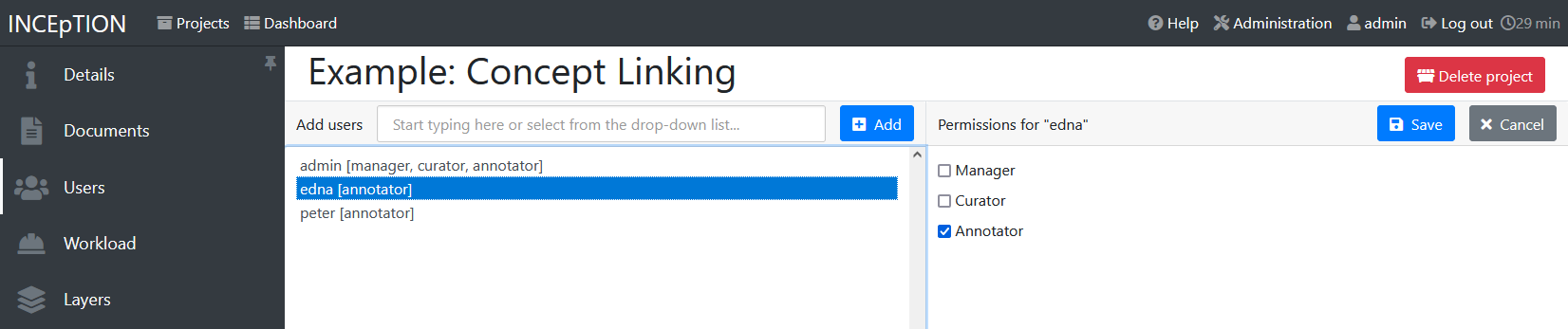

Here, you may add users to your project and change their rights within that project. You can only add users to a project from the dropdown at the left if they exist already in the INCEpTION instance.

-

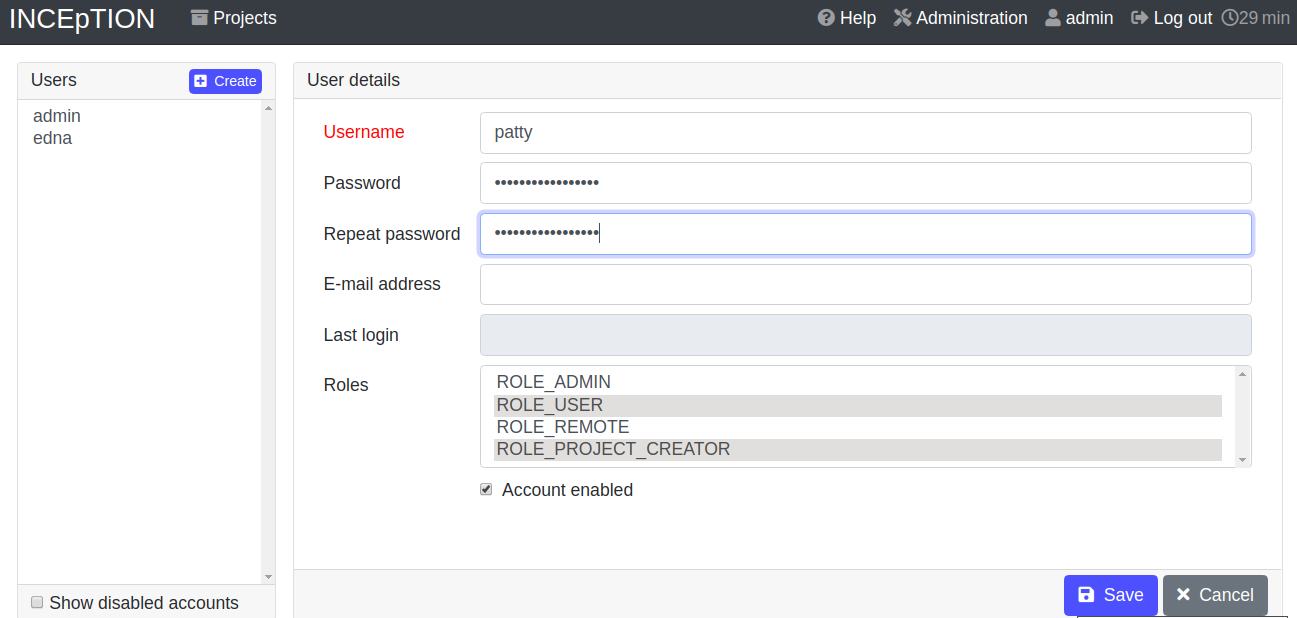

Add new users: In order to find users for a project in the dropdown, you need to add them to your INCEpTION instance first. Click on the administration button in the very top right corner and select section Users on the left. For user roles (for an instance of INCEpTION) see the User Management in the main documentation.

-

Giving rights to users: After selecting a user from the dropdown in the project settings section Users, you can check and uncheck the user’s rights on the right side. User rights count for that project only and are different from user roles which count for the whole INCEpTION instance. Any combination of rights is possible and the user will always have the sum of all rights given.

| User Right | Description | Access to Dashboard Sections |
|---|---|---|
| Annotator | annotate only | Annotation, Knowledge Base |
| Curator | curate only | Curation, Workload, Agreement, Evaluation |
| Manager | annotate, curate, create projects, add new documents, add guidelines, manage users, open annotated documents of other users (read only) | All pages |
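To illustrate that a user always has the sum of all rights given, the following sketch computes the union of the dashboard sections for a combination of rights. It is an illustration only, not part of INCEpTION: the function name `pages_for_rights` is made up, and “All pages” for the Manager is spelled out here as the dashboard sections listed in Structure of an Annotation Project, which is an assumption.

```shell
# Hypothetical helper: print the dashboard sections a user can access,
# given one or more project rights (annotator, curator, manager).
pages_for_rights() {
  for right in "$@"; do
    case "$right" in
      annotator)
        printf '%s\n' "Annotation" "Knowledge Base" ;;
      curator)
        printf '%s\n' "Curation" "Workload" "Agreement" "Evaluation" ;;
      manager)
        # Assumption: "All pages" means these dashboard sections
        printf '%s\n' "Annotation" "Curation" "Knowledge Base" \
          "Agreement" "Workload" "Evaluation" "Settings" ;;
    esac
  done | LC_ALL=C sort -u   # the union: every section appears once
}

# A user who is both annotator and curator sees the sections of both rights.
pages_for_rights annotator curator
```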

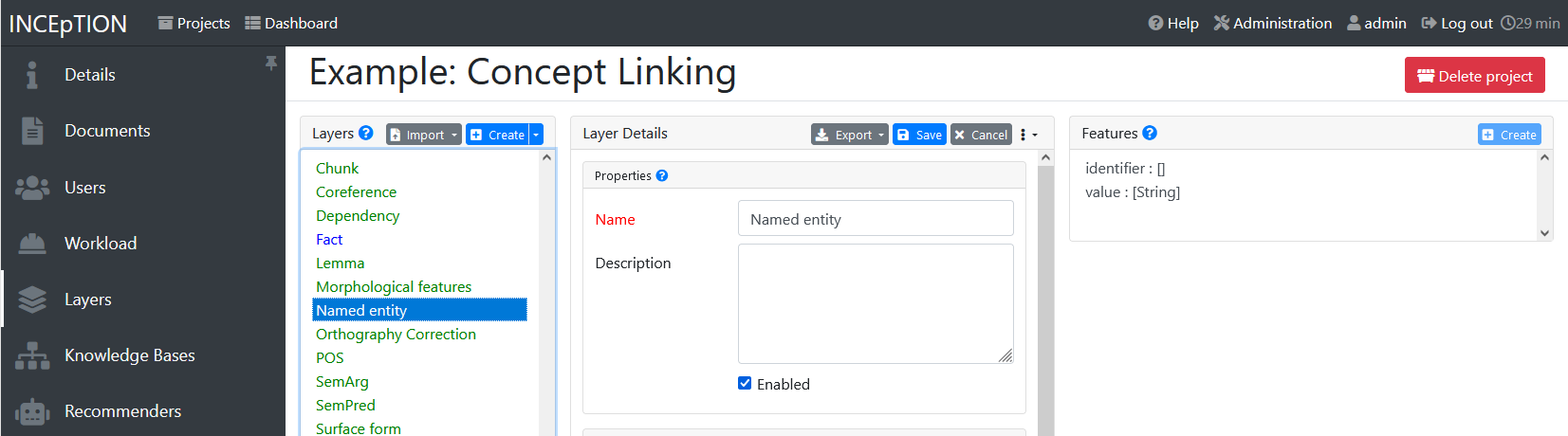

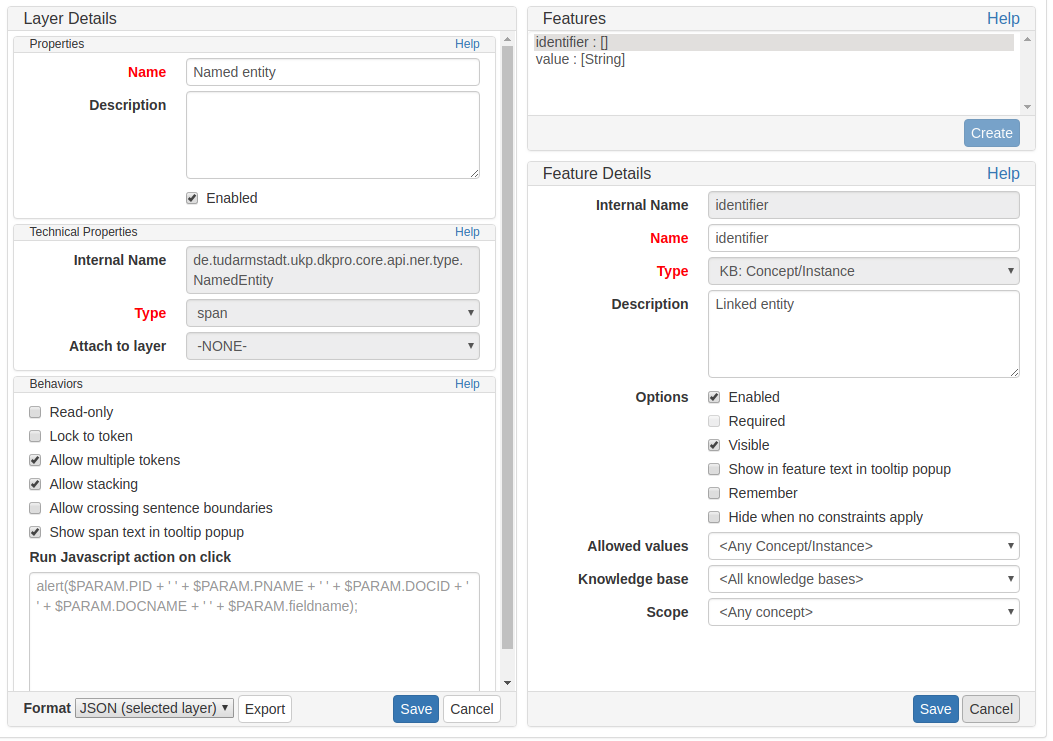

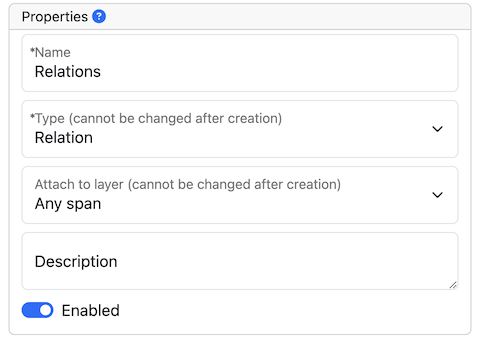

Layers

In this section, you may create custom layers and modify them later. Built-in layers should not be changed. In case you do not want to work on built-in layers only but wish to create custom layers designed for your individual task, we recommend reading the documentation for details on Layers.

|

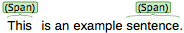

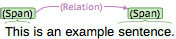

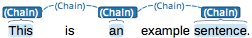

Layers and Features: There are different “aspects” or “categories” you might want to annotate.

For example, you might want to annotate all the places and persons in a text and link them to a knowledge base entry (see the box about Knowledge Bases) to tell which concrete place or person they are.

This type of annotation is called Named Entity.

In another case, you might want to annotate which words are verbs, nouns, adjectives, prepositions and so on (called Parts of Speech).

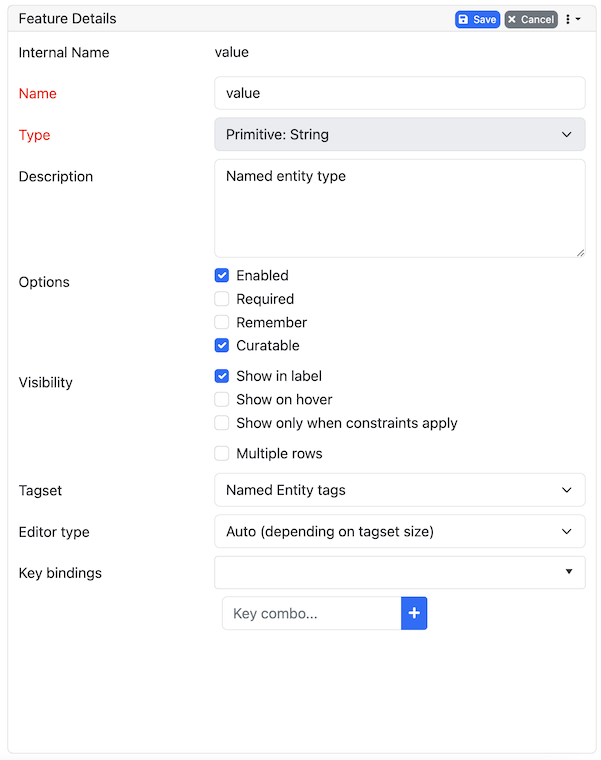

What we called “aspects”, “categories” or “ways to annotate” here, is referred to as layers in INCEpTION as in many other annotation tools, too. INCEpTION supports span layers in order to annotate a span from one character (“letter”) in the text to another, relation layers in order to annotate the relation between two span annotations and chain layers which are normally used to annotate coreferences, that is, to show that different words or phrases refer to the same person or object (but not which one). A span layer annotation always anchors on one span only. A relation layer annotation always anchors on the two span annotations of the relation. Chains anchor on all spans which are part of the chain. For span layers, the default granularity is to annotate one or more tokens (“words”) but you can adjust to character level or sentence level in the layer details (see Layers in the main documentation; especially Properties). Each layer provides appropriate fields, so-called features, to enter a label for the annotation of the selected text part. For example, on the Named Entity layer in INCEpTION, you find two feature-fields: value and identifier. In value, you can enter what kind of entity it is (“LOC” for a location, “PER” for a person, “ORG” for an organization and “OTH” for other). In identifier you can enter which concrete entity (which must be in the knowledge base) it is. For the example “Paris”, this may be the French capital; the person Paris Hilton; a company named “Paris” or something else. INCEpTION provides built-in layers with built-in features to give you a starting point. Built-in layers cannot be deleted as custom layers can. However, new features can be added. See the main documentation for details on Layers, features, the different types of layers and features, how to create custom layers and how to adjust them for your individual task. |

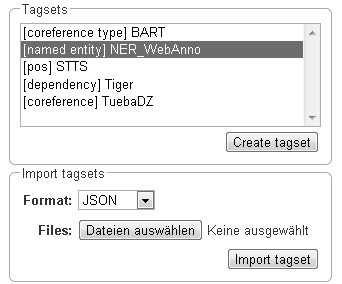

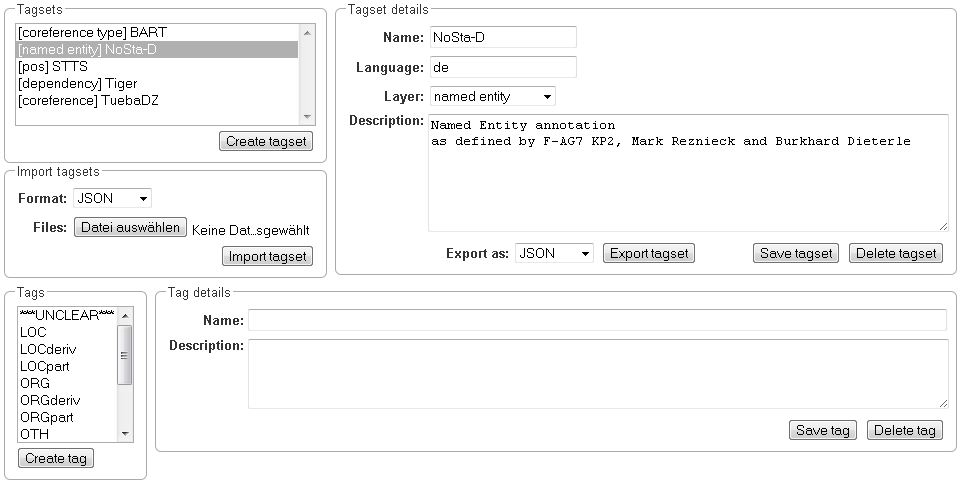

Tagsets

Behind this tab, you can modify and create the tagsets for your layers. Tagsets are always bound to a layer, or more precisely to a certain feature of a layer.

| Tagsets: In order for all annotations to have consistent labels, it is preferable to use defined tags which can be given to the annotations. If users do not enter free text for a label but stick to predefined tags, they avoid different names for the same thing and varying spelling. A set of such defined tags is called a tagset i.e. a collection of labels which can be used for annotation. INCEpTION comes with predefined tagsets out of the box and they serve as a suggestion and starting point only. You can modify them or create your own ones. |

| Feature Types: The tags of your tagset must always fit the type of the feature for which it will be used. The feature type defines what type of information the feature can hold, for example “Primitive: Integer” for whole numbers; “Primitive: Float” for decimals; “Primitive: Boolean” for a true/false label only; the most common one, “Primitive: String”, for text labels; or “KB: Concept/Instance/Property” if the feature shall link to a knowledge base. There are more types for features but these are the most important ones for you to know. Changing the type only works for custom features, not for built-in features. In order to do so, scroll in the Feature Details panel (in the Layers tab) until you see the field Type and select the type of your choice. If a tagset shall be linked to a feature, they must have the same type. For more details, see Features in the main documentation. |

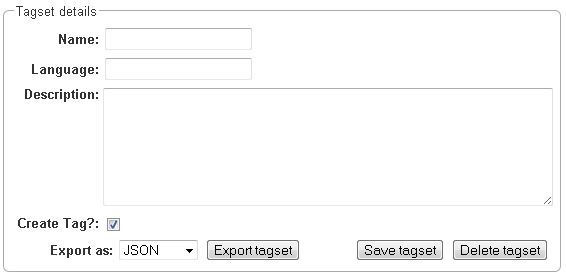

-

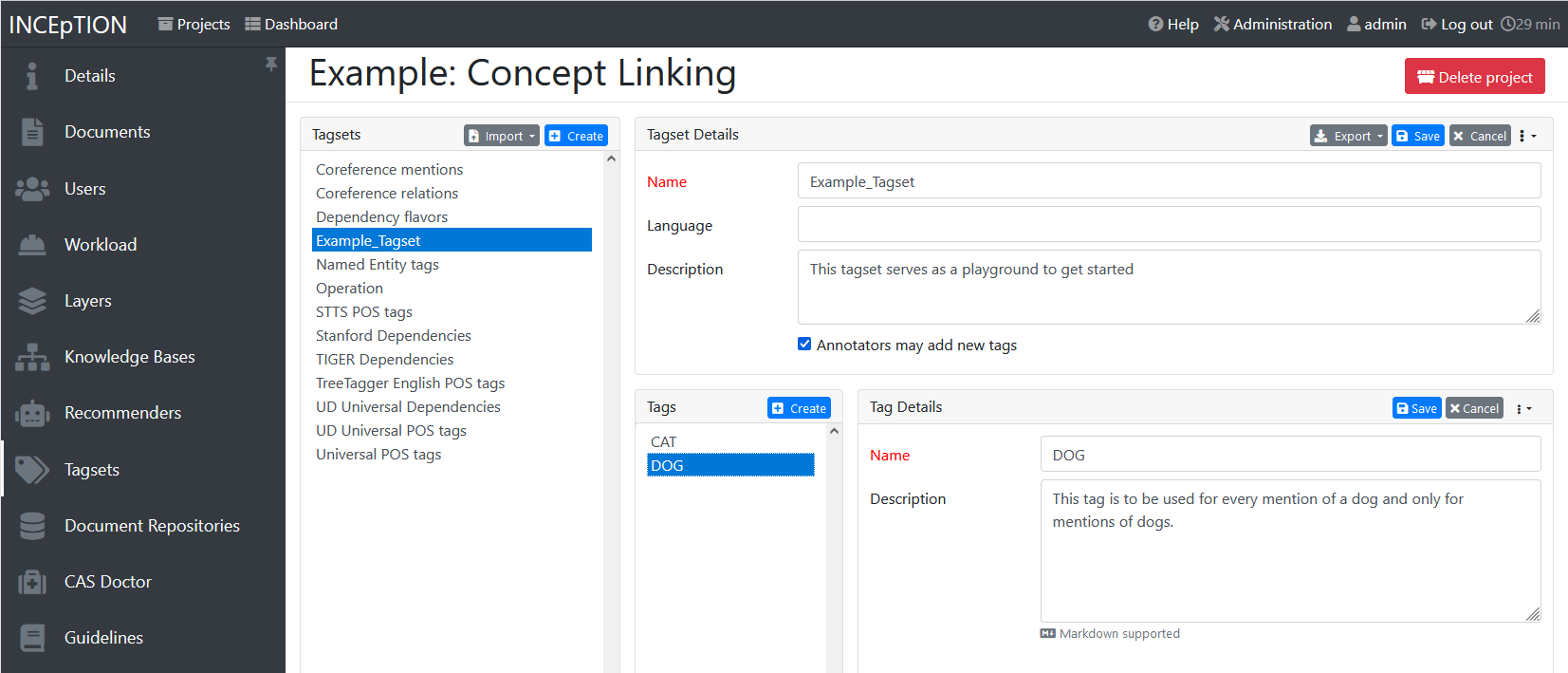

In order to create a new tagset, click on the blue create button on top. Enter a name for it and - not technically necessary but highly recommended to avoid misunderstandings - a speaking description for the tagset. As an example, let’s choose “Example_Tagset” for the name and “This tagset serves as a playground to get started.” for the description. Check or uncheck Annotators may add new tags as you prefer. Now, click on the blue save-button.

-

In order to fill your tagset with tags, first choose the set from the list on the left. Then, click on the blue create-button in the Tags panel at the bottom. A new panel called Tag Details opens right beside it. Enter a name and description for a tag. Let’s have “CAT” for the name and “This tag is to be used for every mention of a cat and only for mentions of cats.” for the description. Click the save-button and the tag has now been added to your set. As another example, create a new tag for the name “DOG” and description “This tag is to be used for every mention of a dog and only for mentions of dogs.”.

-

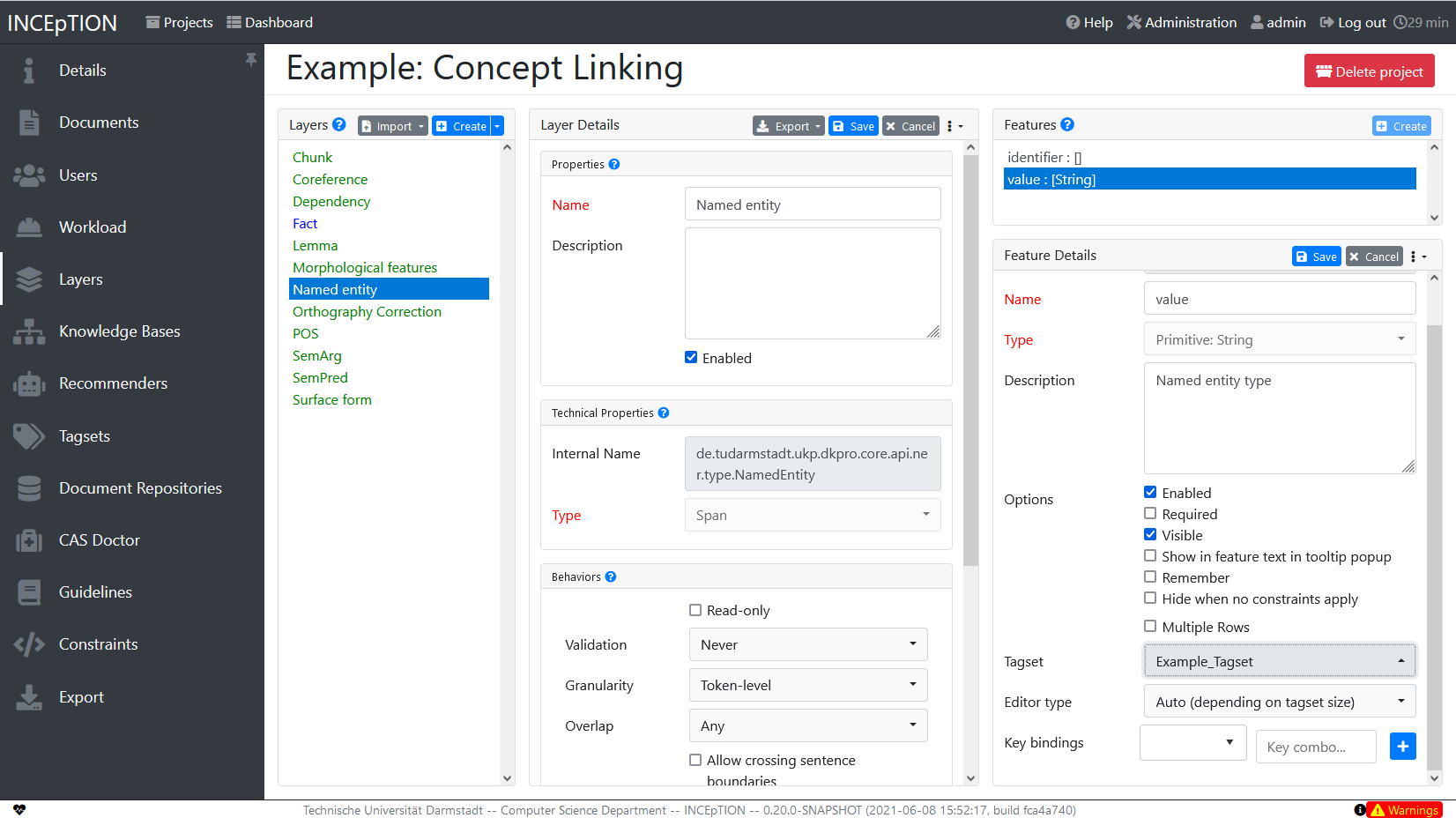

In order to use the tagset, it is necessary to link it to a layer and feature. Herefore, click on the Layers tab and select the layer from the list at the left. As an example, let’s select the layer Named entity. Two new panels open now: Layer Details and Features. We focus on the second one. Choose the feature your tagset is made for. In this example, we choose the feature value. When you click on it, the panel Feature details opens. In this panel, scroll down to Tagset and choose your tagset (to stick with our example: Example_Tagset) from the dropdown and click Save. The tagset which was selected before is not linked to the layer any more but the new one is.

-

From now on, you can select your tags for annotating. Navigate to the annotation page (click INCEpTION on the top left → Annotation and choose the document pets2.txt). On the layer dropdown on the right, choose the layer Named entity. When you double-click on any part in the text, for example “Socke” in line one, and click on the dropdown value on the right, you find the tags “DOG” and “CAT” to choose from. (For details on how to annotate, see First Annotations with INCEpTION).

-

You might want to link Named Entity tags again to the Named entity Layer and value feature in order to use them like they were before our little experiment.

-

For more details on Tagsets, see the main documentation, Tagsets.

-

Note: Tagsets can be changed and deleted. But the annotations they have been used for will remain with the same tag though. Other than the built-in layers, built-in tagsets can also be deleted.
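The idea behind a tagset, a fixed set of allowed labels instead of free text, can be sketched in a few lines. This is an illustration only and not how INCEpTION stores or checks tags; the variable `TAGSET` and the function `is_in_tagset` are made up, using the tags “CAT” and “DOG” from the example above.

```shell
# Hypothetical tagset with the two example tags from this section
TAGSET="CAT DOG"

# Succeeds only if the given label is exactly one of the defined tags.
is_in_tagset() {
  for tag in $TAGSET; do
    [ "$tag" = "$1" ] && return 0
  done
  return 1
}

# Free-text labels such as "cat" or "Hund" would create inconsistent labels;
# checking against the tagset catches them.
for label in CAT DOG cat Hund; do
  if is_in_tagset "$label"; then
    echo "$label: ok"
  else
    echo "$label: not in tagset"
  fi
done
```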

| Saving: Some steps, like annotations, are saved automatically in INCEpTION. Others need to be saved manually. Whenever there is a blue Save button, it is necessary to click it to save the work. |

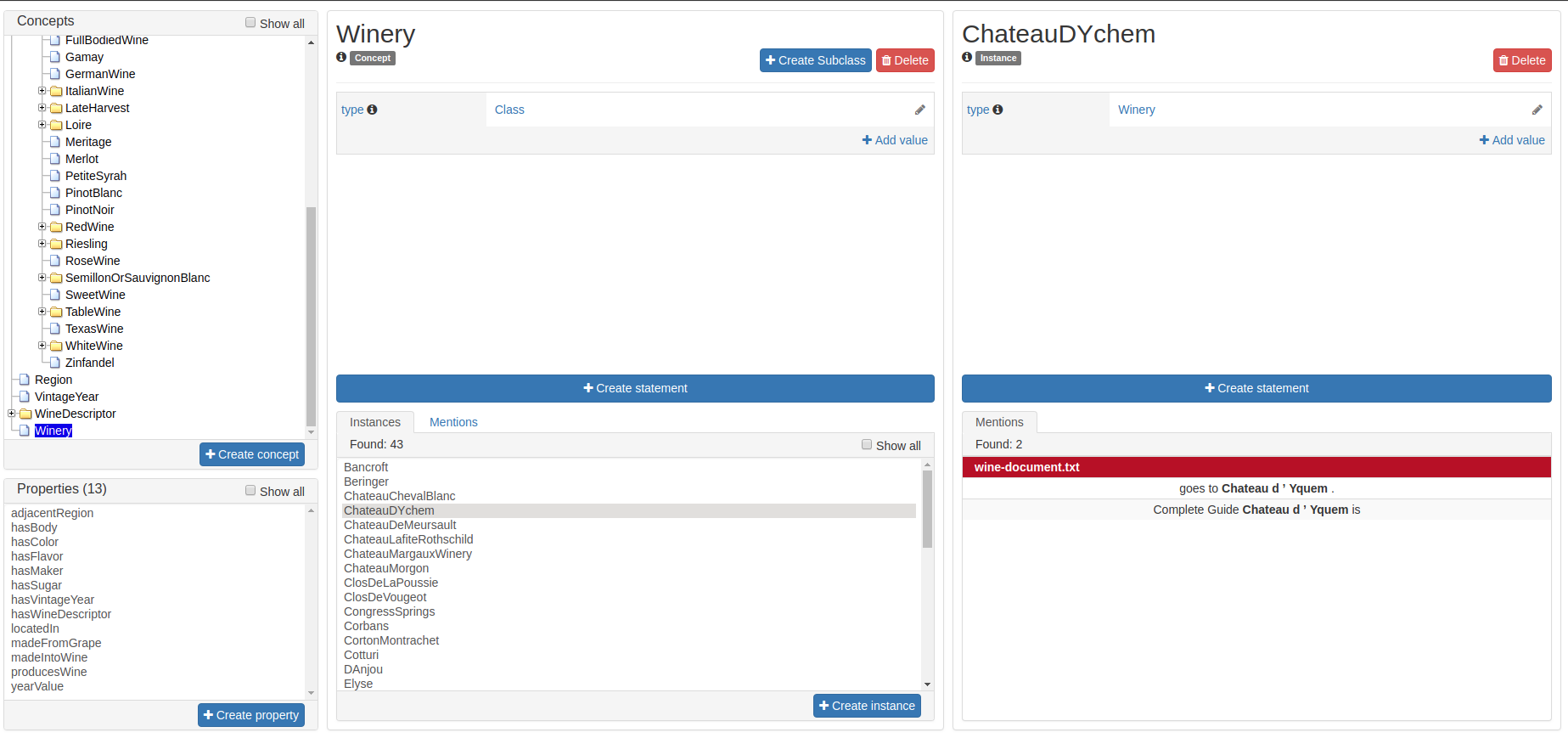

Knowledge Bases

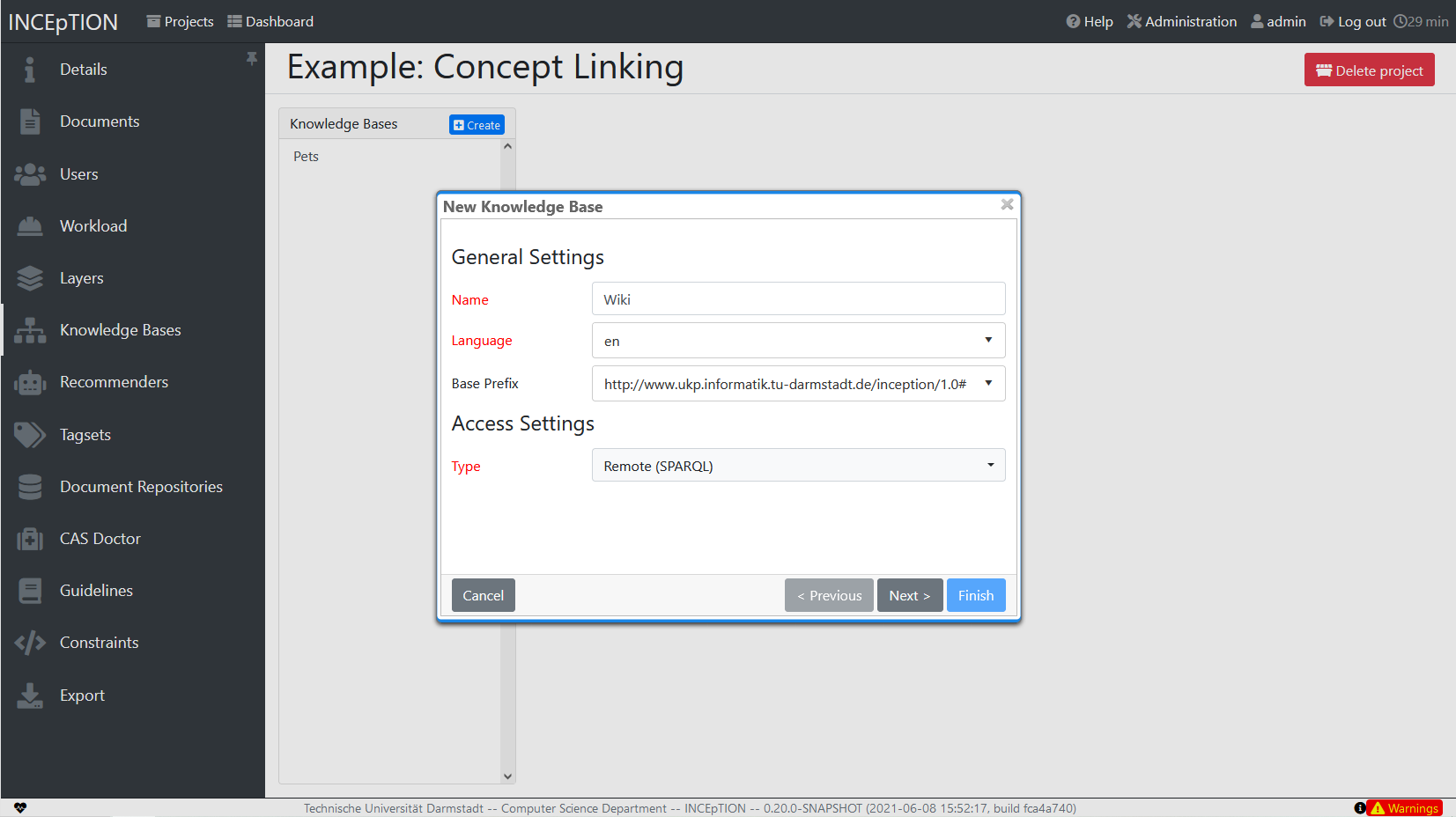

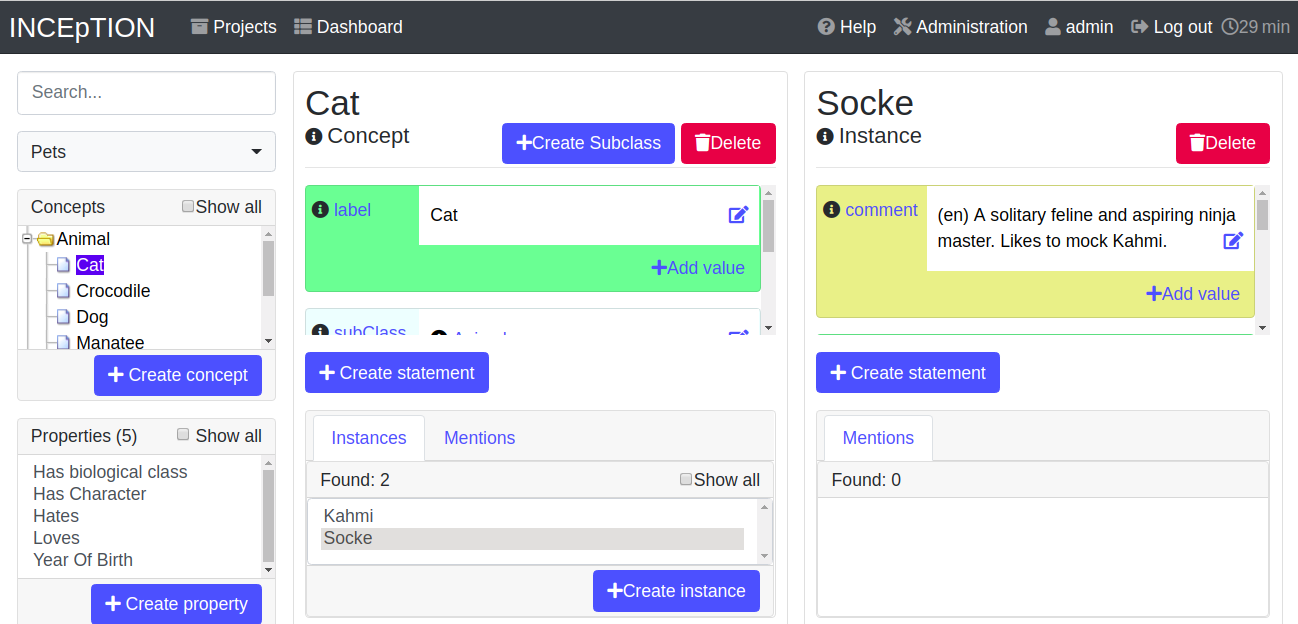

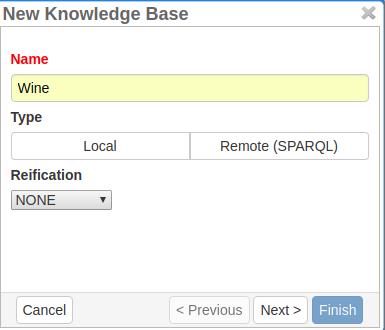

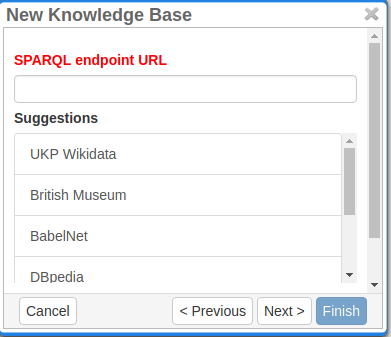

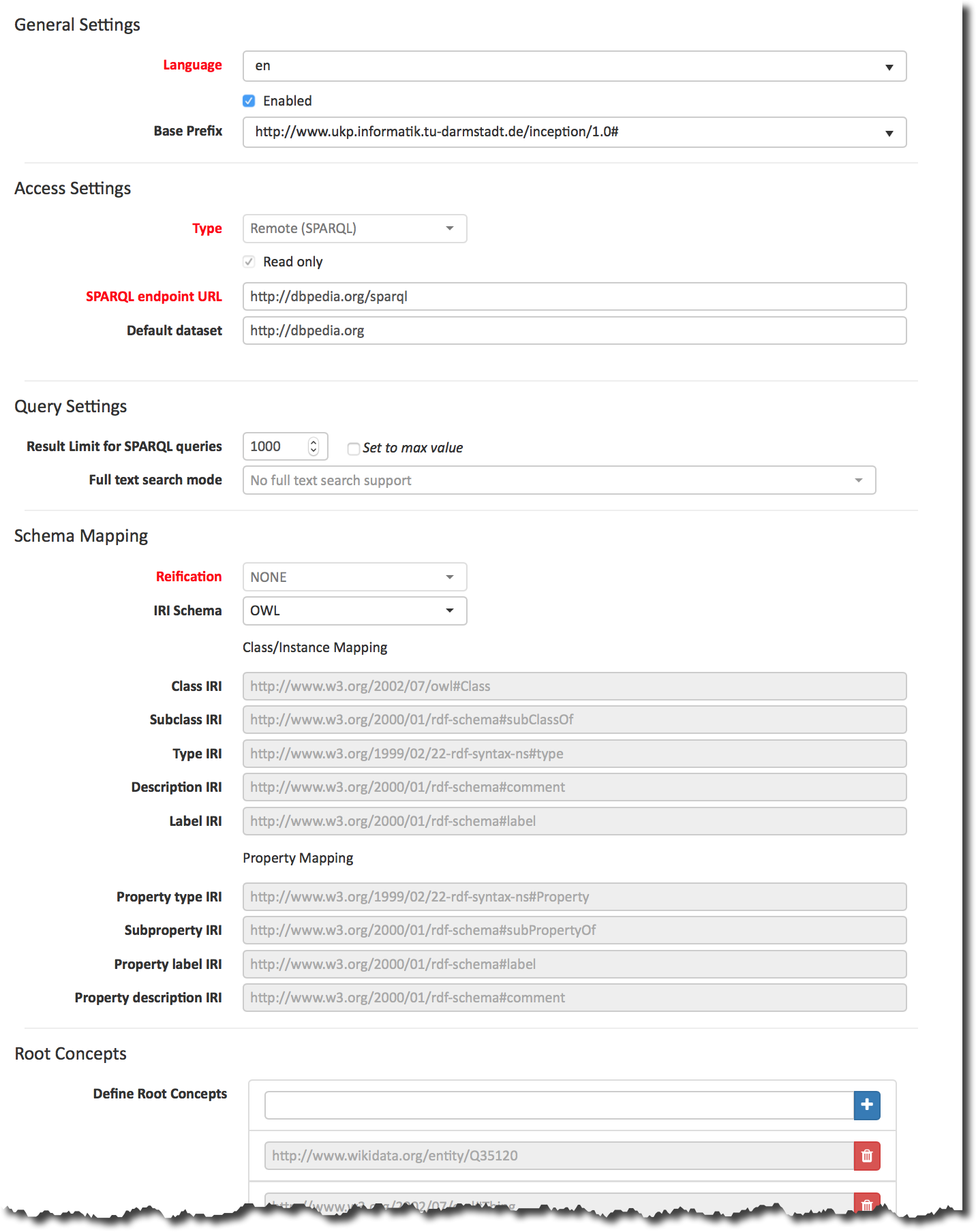

In this section, you can change the settings for the knowledge bases used in your project, you can import local and remote knowledge bases into your project and you can create a custom knowledge base. The latter will be empty at first. It will not be filled here in the settings but at the knowledge base page ( → Dashboard, → Knowledge base; also see the part Knowledge Base in Structure of an Annotation Project). In order to import or create a knowledge base, just click the Create button and INCEpTION will lead you.

| Knowledge Bases are databases for knowledge. Let’s assume the mention “Paris” is to be annotated. There are many different Parises (persons, the capital city of France and more), so the annotation should tell clearly which entity with the name “Paris” is meant here. For this purpose, the knowledge base needs to have an entry for the correct entity. In the annotation, we then want to make a reference to that very entry. There are knowledge bases on the web (“remote”) which can be used with INCEpTION, e.g. WikiData. You can also create your own, new knowledge bases and use them in INCEpTION. They will be saved on your device (“local”). |

- Note that you can have several knowledge bases in your INCEpTION instance but you can choose for every project which one(s) to use. Using many little knowledge bases in one project will slow down the performance more than a few big ones.
- Via the Dashboard (click the Dashboard-button at the top centre), you get to the knowledge base page. This page is different from the tab in the project settings: it is where you can modify and work on your knowledge bases.
- For details on knowledge bases, see our main documentation on Knowledge Bases, or our tutorial video “Overview” mentioning knowledge bases.
- If you would like to explore a knowledge base, check the example project we have downloaded and imported before. It contains a small knowledge base, too.

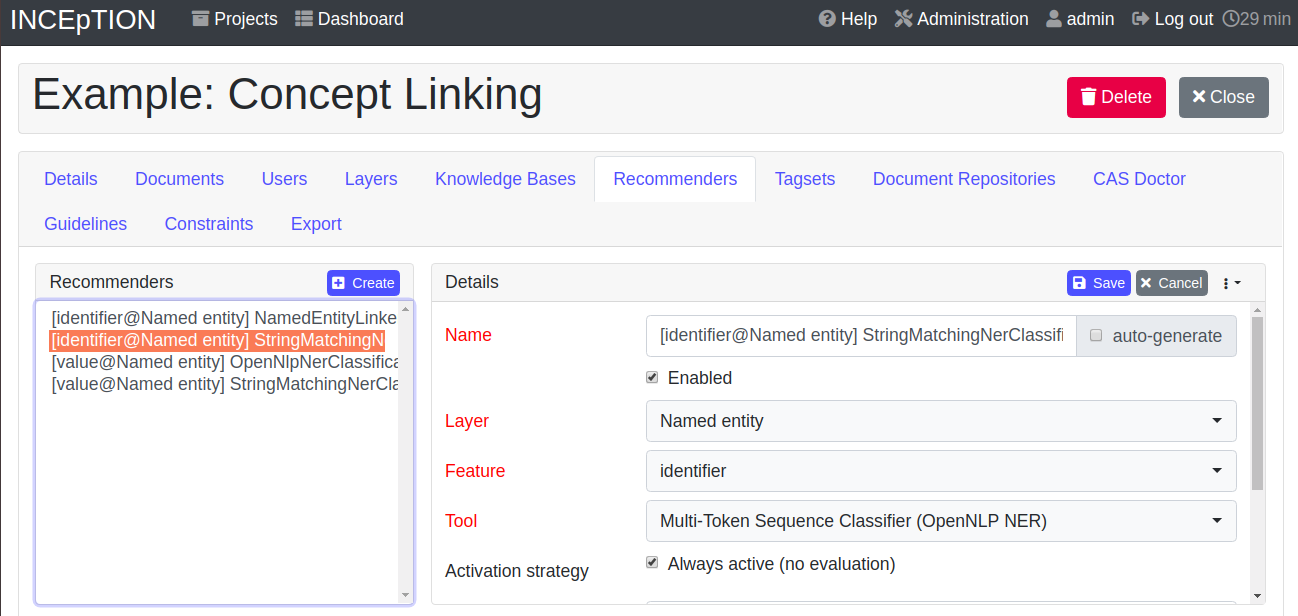

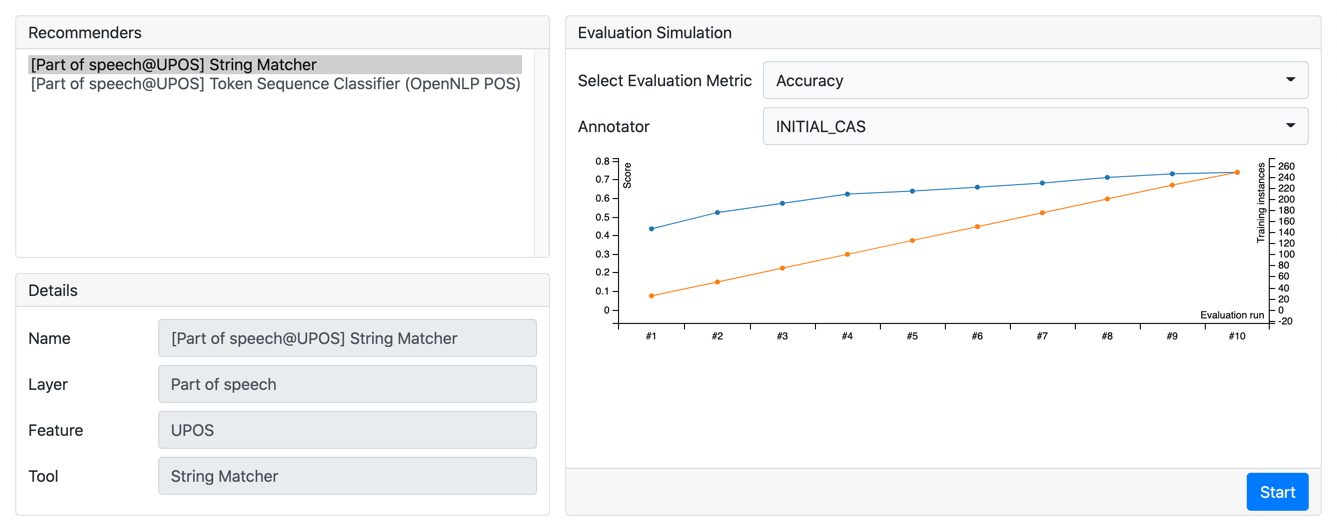

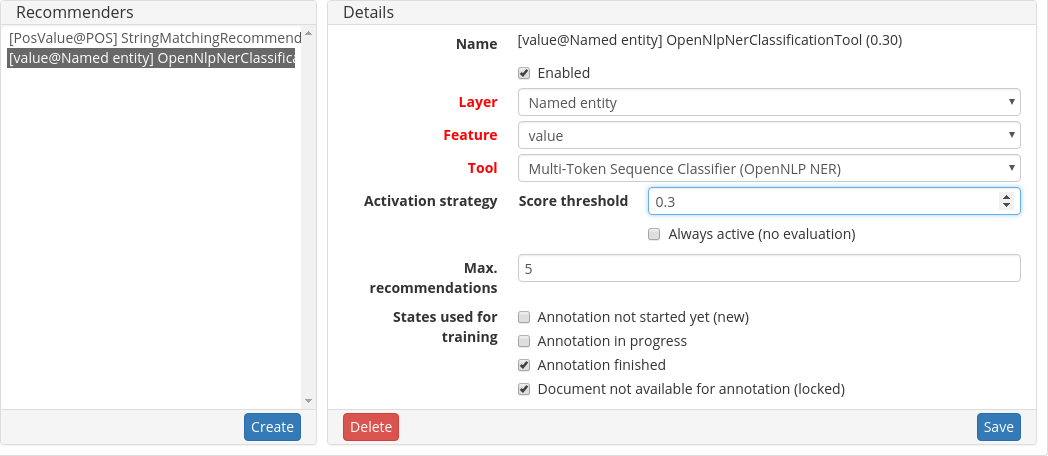

Recommenders

In this section, you can create and modify your recommenders. They learn from what the user annotates and give suggestions. For details on how to use recommenders, see our main documentation on Recommenders in the Annotation section. For details on how to create and adjust them, see Recommenders in the Projects section. Or check the tutorial video “Recommender Basics”.

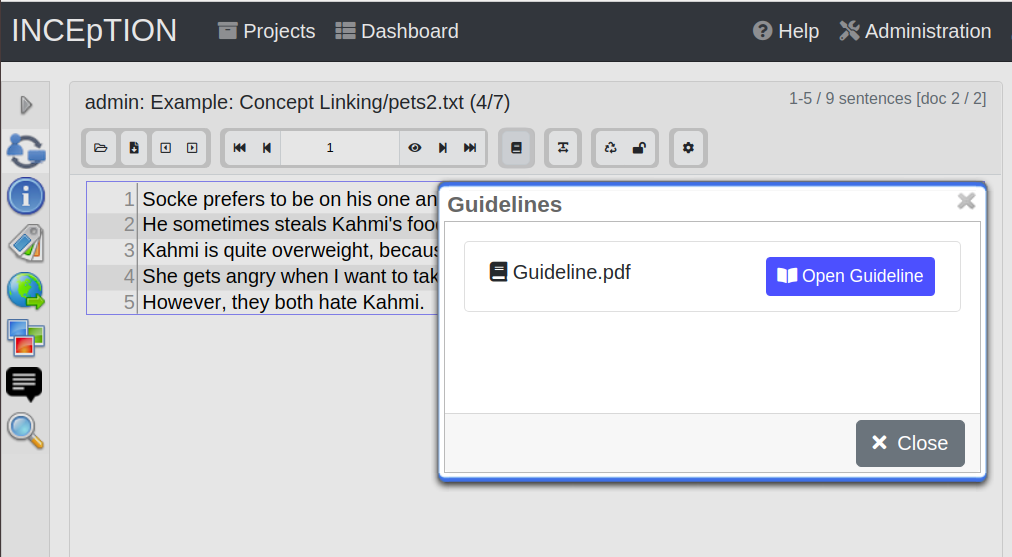

Guidelines

In this section, you may import files with annotation guidelines. There is no automatic correction or warning from INCEpTION if guidelines are violated but it is a short way for every user in the project to read and check the team guidelines while working. On the annotation page (→ Dashboard → Annotation → open any document), annotators can quickly look them up by clicking on the guidelines button on the top which looks like a book (this button only appears if at least one guideline was imported).

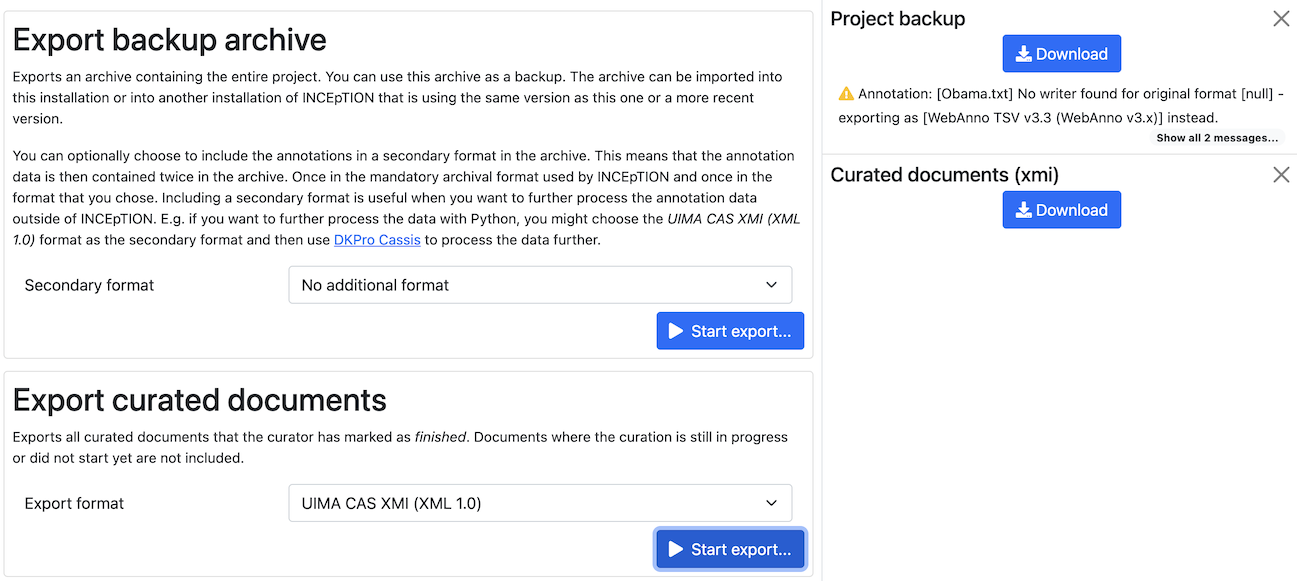

Export

In this section, you can export your project partially or wholly. Projects which have been exported can be imported again in INCEpTION the way we did with our example project in section Download and import an Example Project: at the start page with the Import button. We recommend exporting projects on a regular basis in order to have a backup. For the different formats, their strengths and weaknesses, check the main documentation, Appendix A: Formats. We recommend using WebAnno TSV x.x (where “x.x” is the highest number available, e.g. 3.2) whenever possible. Since it has been created specifically for this application, it will provide all features required. However, many other formats are provided.
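If you export regularly for backup purposes, it can help to keep every exported file under a dated name so that older backups are not overwritten. The following is a minimal sketch for the terminal; the function name `backup_export` and the file and folder names in the usage line are made up for this illustration.

```shell
# Hypothetical helper: copy an exported project file into a backup folder,
# prefixing the copy with the current date and time.
backup_export() {
  src="$1"        # the exported project file
  dest_dir="$2"   # the folder in which backups are collected
  if [ ! -f "$src" ]; then
    echo "No such export: $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  stamp=$(date +%Y%m%d-%H%M%S)
  cp "$src" "$dest_dir/$stamp-$(basename "$src")"
}

# Usage (hypothetical names):
# backup_export "$HOME/Downloads/my-project-export.zip" "$HOME/inception-backups"
```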

Structure of an Annotation Project

Here, we will find out what you can do in each project by having a look at the Structure of an Annotation Project. To do so, we examine the dashboard.

If you are in a project already, click on the dashboard button on the top to get there. If you just logged in, choose a project by clicking on its name. As you are a Project Manager (see User Rights), you see all of the following sub pages. For details on each section, check the section on Core Functionalities.

Annotation

If you went to First Annotations with INCEpTION before, you have been here already. Here, the annotators can go to annotate the texts.

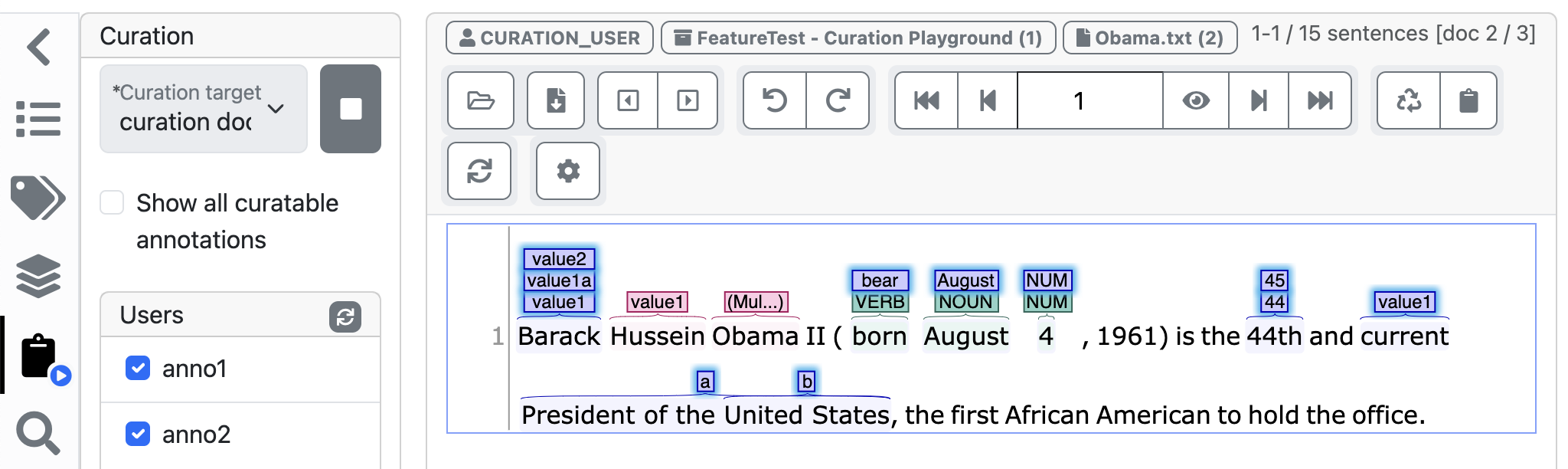

Curation

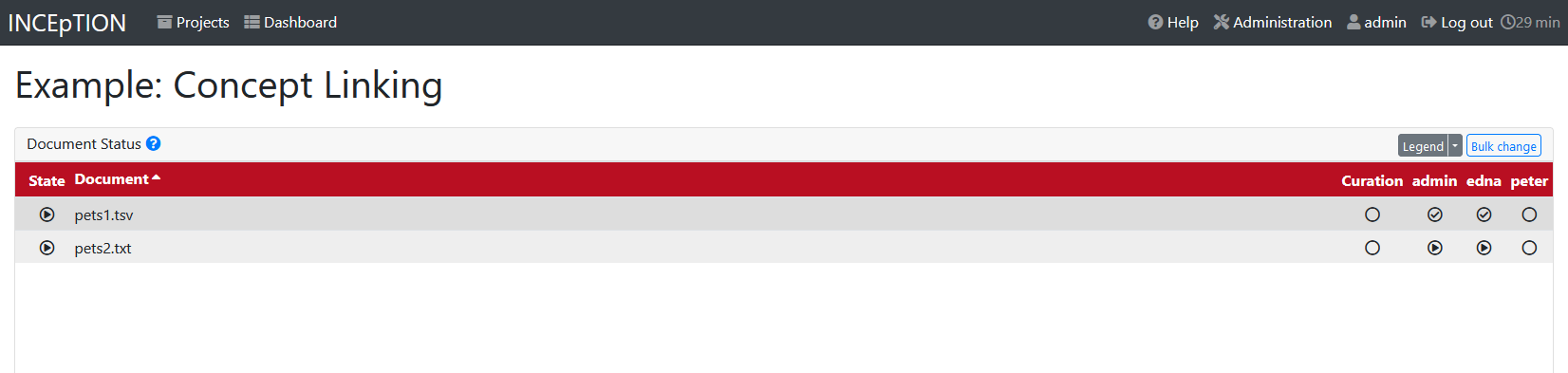

Everyone with curation rights (see User Rights) within a project can curate it. All other users can neither see nor access this page. Only documents marked as finished by at least one annotator can be curated. For details on how to curate, see the main documentation → Curation or just try it out:

| Curation: If several annotators work on a project, their annotations usually do not match perfectly. During the process called "Curation", you decide which annotations to keep in the final result. |

- Create some annotations in any document.
- Mark the document as finished: Just click on the lock on top.
- Add another user, just for testing this (see Users in the section Project Settings).
- Log out and log in again as the test user.
- In the very same document, make some annotations which are the same and some which are different from before. Mark the document as finished.
- Log in as any user with curation rights (e.g. as the “admin” user we used before), enter the curation page and explore how to curate: You see the automatic merge on top (what both users agreed on has been accepted already) and the annotations of each of the users below. Differences are highlighted. You can accept an annotation by clicking on it.
- As a curator, you can also create new annotations on this page. It works exactly like on the Annotation page. Note that users who have nothing but curation rights can neither see nor access the annotation page (see User Rights).

Knowledge Base

Also see the section on knowledge bases in the project settings. On the Knowledge Base page, you can manage and create your knowledge base(s) for the project you are in. You can create new knowledge bases from scratch, modify them and integrate existing knowledge bases into your project which are either local (that is, they are saved on your device) or remote (that is, they are online). Note that this knowledge base page is distinct from the tab of the same name in the project settings (see Knowledge Base in section Project Settings).

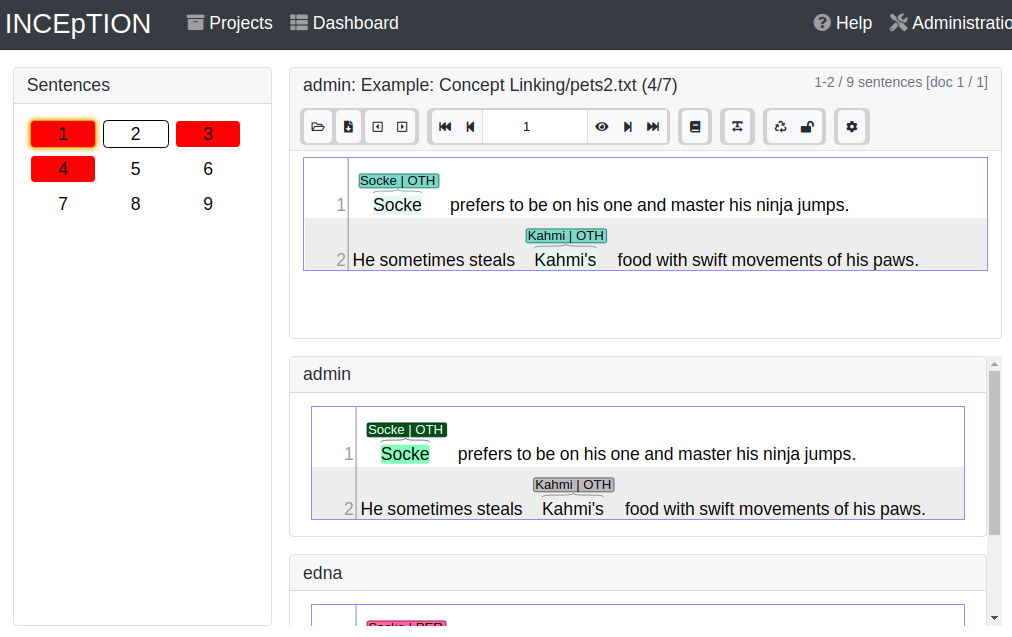

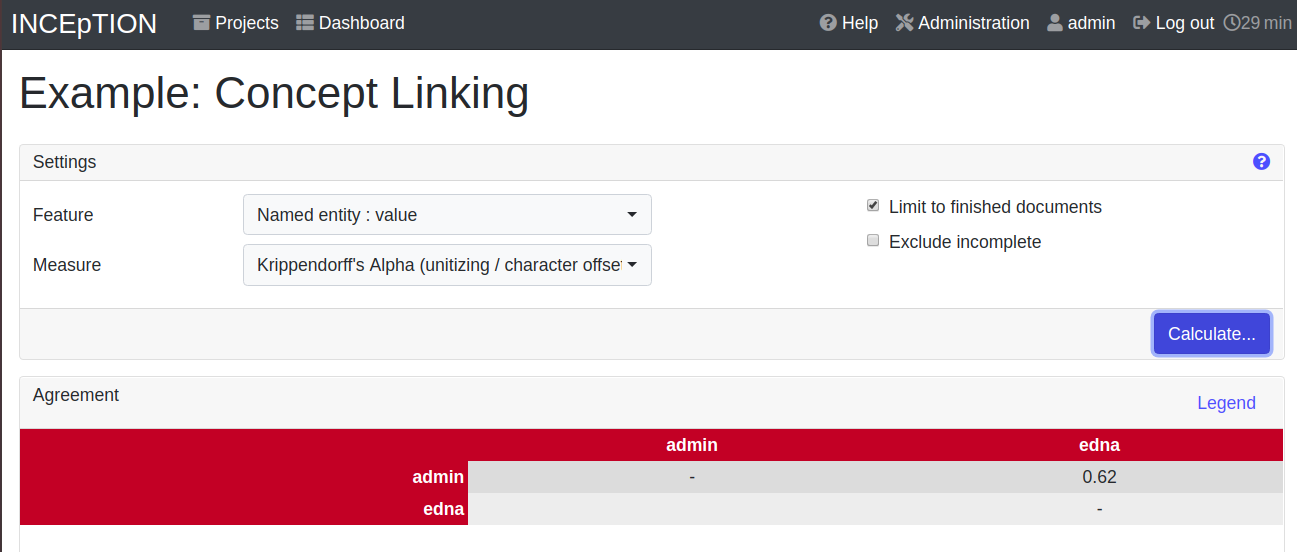

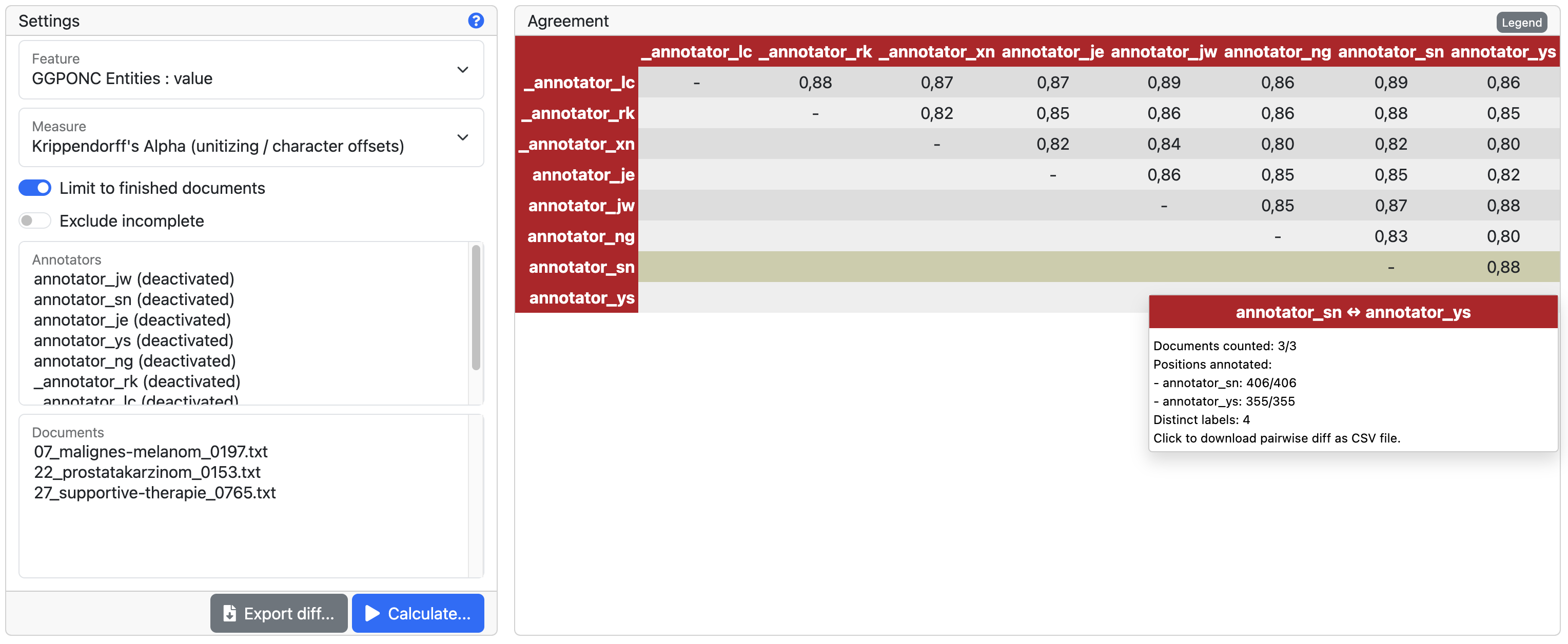

Agreement

On this page, you can calculate the annotator agreement. Note: Only documents marked as finished by annotators (clicking on the little lock on the annotation page) are taken into account.

| Agreement: The annotations of different annotators usually do not match perfectly. This aspect of difference / similarity is called agreement. For agreement, some common measures are provided. |
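To give a feeling for what such a measure expresses, the following sketch computes the simplest possible one: the share of positions on which two annotators chose the same label. It is an illustration only and not one of the measures INCEpTION provides; the function name `percent_agreement` and the file names in the usage line are made up, and each input file is assumed to contain one label per line for the same sequence of text spans.

```shell
# Hypothetical helper: fraction of lines on which two label files agree.
percent_agreement() {
  # paste joins line i of both files with a tab between the two labels
  paste "$1" "$2" | awk -F'\t' '
    { total++; if ($1 == $2) same++ }
    END { if (total > 0) printf "%.2f\n", same / total }'
}

# Usage (hypothetical names):
# percent_agreement labels-annotator1.txt labels-annotator2.txt
```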

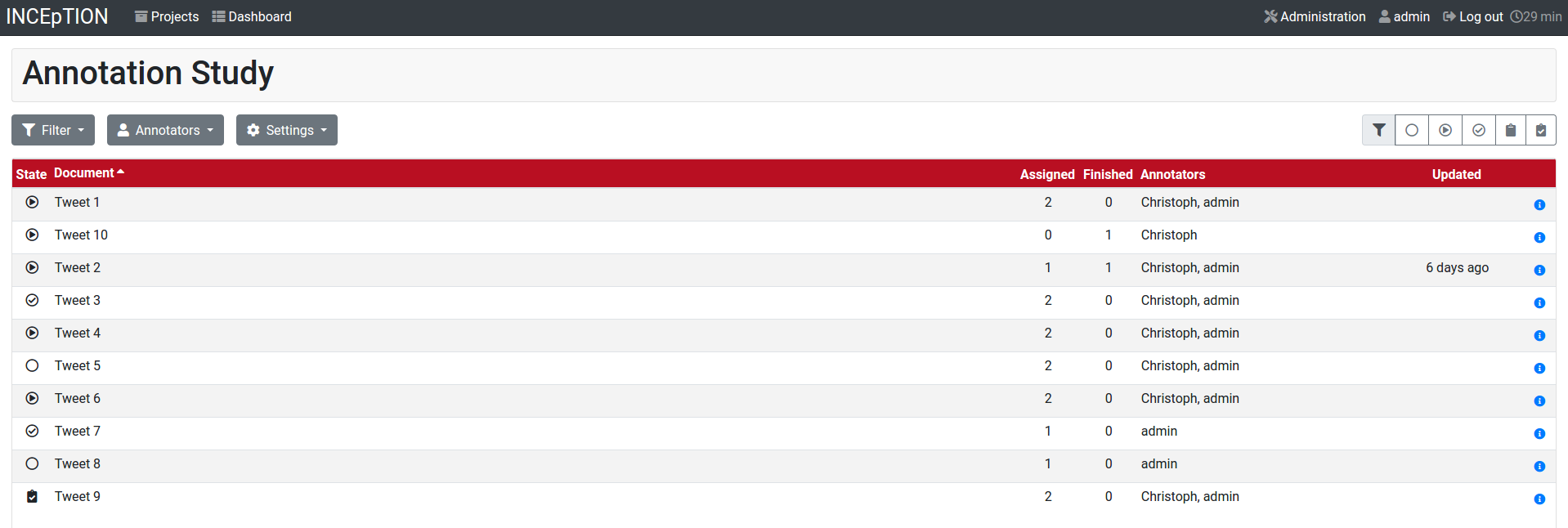

Workload

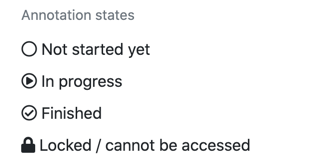

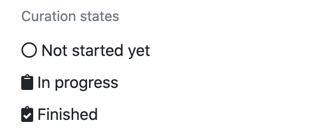

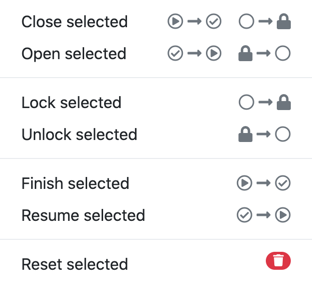

Here you can check the overall progress of your project; see which user is working on or has finished which document; and toggle for each user the status of each document between Done / In Progress or between New / Locked. For details, see Workload Management in the main documentation.

Evaluation

The evaluation page shows a learning curve diagram of each recommender (see Recommender).

Settings

Here, you can organize, manage and adjust all the details of your project. We had a look at those you need to get started for your own projects in the section Project Settings already.

This was the overview on what you can do in each project and what elements each project has. Now you are ready to go for your own annotations.

First Annotations with INCEpTION

In this section, we will make our first annotations. If you have not downloaded and imported an example project yet, we recommend returning to Download and import an Example Project and doing so first. This section explains little or no theory and background. In case you want to have some theory and background knowledge first, we recommend reading the section Structure of an Annotation Project.

Create your first annotations

This section will lead you step by step. You may also want to watch our tutorial video “Overview” on how to create annotations. We will create a Named Entity annotation which tells whether a mention is a person (PER), location (LOC), organization (ORG) or other (OTH):

| Creating your own Projects: In this guide, we will use our example project. If you would like to create your own project later on, click on create, enter a project name and click on save. Use the Projects link at the top of the screen to return to the project overview and select the project you just created to work with it. See Project Settings in order to add documents, users, guidelines and more to your project. |

Step 1 - Opening a Project: After logging in, what you see first is the Project overview. Here, you can see all the projects which you have access to. Right now, this will be only the example project. Choose the example project by clicking on its name and you will be on the Dashboard of this project.

| Instructions to Example Projects: In case of the example project, on the dashboard you also find instructions on how to use it. This goes for all our example projects. You may use them instead of or in addition to the next steps of this guide. In case of your own projects, you will find the description you have given them instead. |

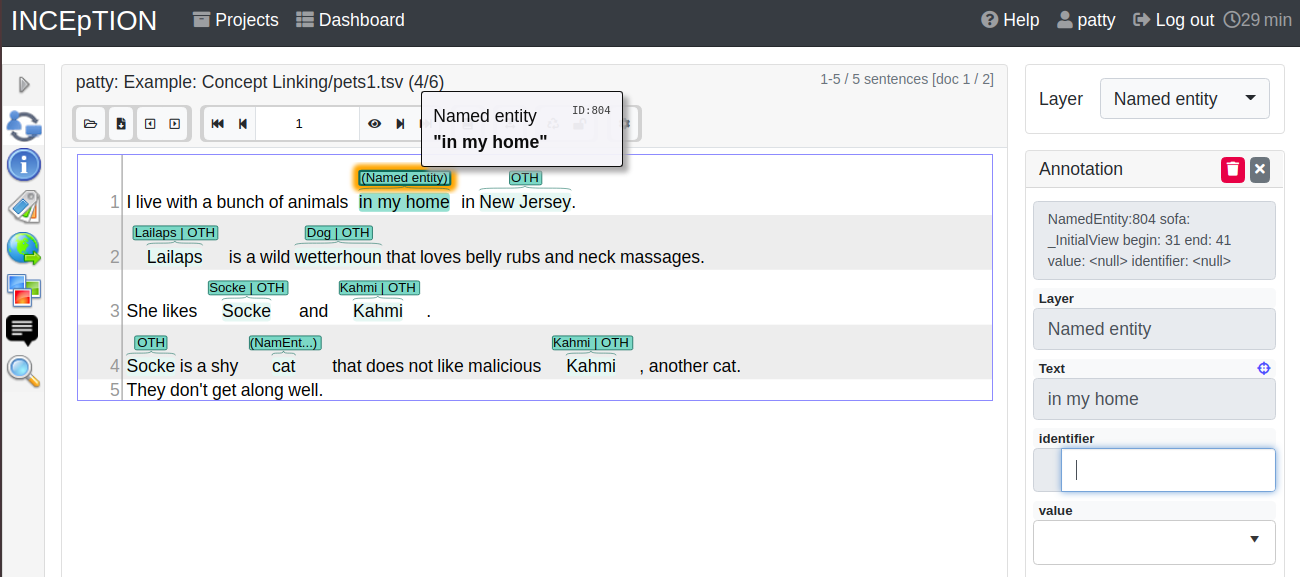

Step 2 - Open the Annotation Page: In order to annotate, click on Annotation on the top left. You will be asked to open the document which you want to annotate. For this guide, choose pets1.tsv.

| Annotations in newly imported Projects: In the example project, you will see several annotations already. If you import projects or single documents (see Documents) without any annotations, there will be none. But in the example projects, we have added some annotations already as examples. If you export a project (see Export) and import it again (as we just did with the example project in Download and import an Example Project), there will be the same annotations as before. |

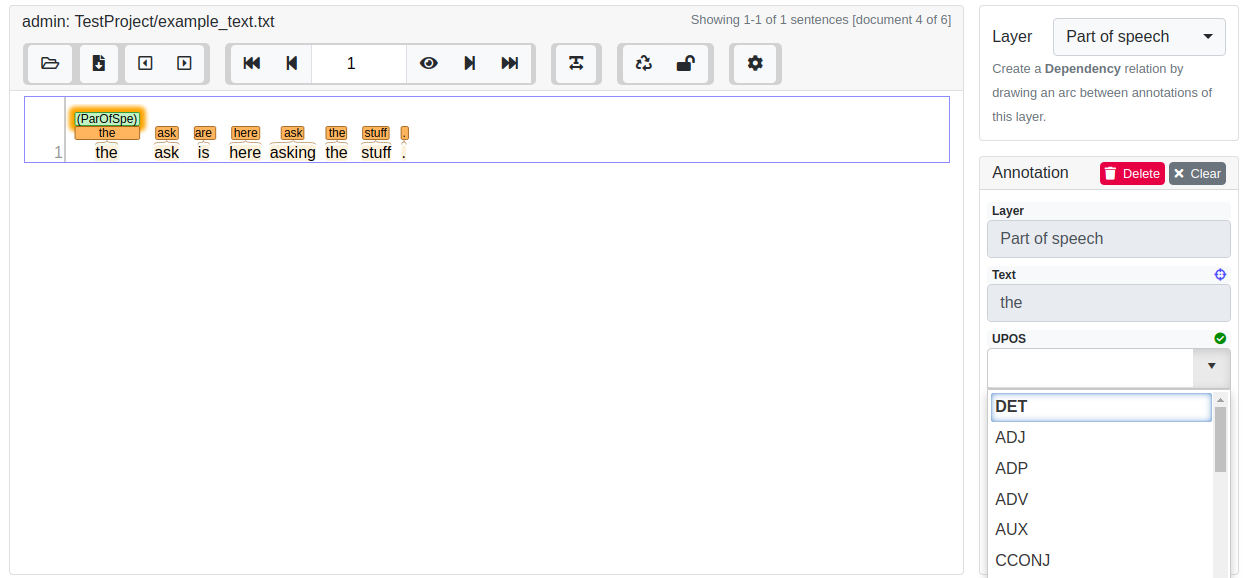

Step 3 - Create an Annotation: After opening the document, select Named entity from the Layer dropdown menu on the right side of the screen to create your first annotation. Then, use the mouse to select a word in the annotation area, e.g. in my home in line one. When you release the mouse button, the annotation will immediately be created and you can edit its details in the right sidebar (see next paragraph). These “details” are the features we mentioned before.

Note: All annotations will be saved automatically without clicking an extra save-button.

Congratulations, you have created your first annotation!

Now, let's examine the right panel to edit the details or, to be precise, the features. You find the panel named Layer on top and Annotation below.

In the Layer-dropdown, you can choose the layer you want to annotate with as we just did. You always have to choose it before you make a new annotation. After an annotation has been created, its layer cannot be changed any more. In order to change it, you need to delete it, select the right layer and create a new annotation.

If you are not sure what layers are, check the box on Layers and Features in the section Project Settings. In order to learn how to adjust and create them for your purpose, see section Layers in the main documentation.

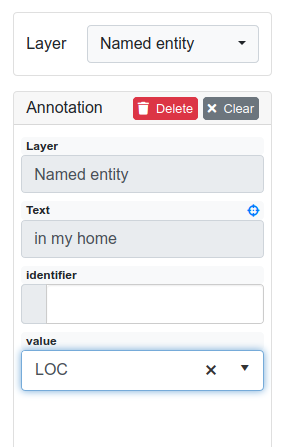

In the Annotation panel, you see the details of a selected annotation. They are called features.

It shows the layer the annotation is made in (field Layer; here: Named entity) and what part of the text has been annotated (field Text; here in my home).

Below, you can see and modify what has been entered for each of the so-called Features.

If you are not sure what features are, check the box on Layers and Features in the section Project Settings. Here, the layer Named entity (see the note box on Named Entity) has the features identifier and value.

The identifier tells to which entity in the knowledge base the annotated text refers.

For example, in case the home referred to here is a location the knowledge base knows, you can choose it in the dropdown of this field.

The value tells whether it is a Location (LOC) like here, a Person (PER), an Organization (ORG) or any other (OTH).

You may enter free text here or work with tagsets to have a well defined set of labels to enter so all of the users within one project will use the same labels.

You can modify and create tagsets in the project settings.

See section Tagsets in Getting Started or check the main documentation for Tagsets.

You have almost finished the Getting Started. One word can still be said about the Sidebars on the left. These offer access to various additional functionalities such as an annotation overview, search, recommenders, etc. Which functionalities are available to you is determined by the project settings. The sidebars can be opened by clicking on one of the sidebar icons and they can be closed by clicking on the arrow icon at the top.

There are several features you might want to check the main documentation for. Especially the Recommender section of the sidebar (the black speech bubble) is worth a look in case you use recommenders (see Recommenders in the section Project Settings). Amongst others, you will find their measures and learning behaviours here. Also note the Search in the sidebar (the magnifying glass): You can create or delete annotations on all or some of the search results.

To get familiar with INCEpTION, you may want to follow the instructions for other example-projects, read more in-depth explanations on its Core Functionalities or explore INCEpTION yourself, learning by doing.

One way or the other: Have fun exploring!

Thank You

We hope the Getting Started helped you with your first steps in INCEpTION and gave you a general idea of how it works. For further reading and more details, we recommend the main documentation, starting right after this paragraph.

Do not hesitate to contact us if you struggle, have any questions or special requirements. We wish you success with your projects and you are welcome to let us know what you are working on.

Core functionalities

Workflow

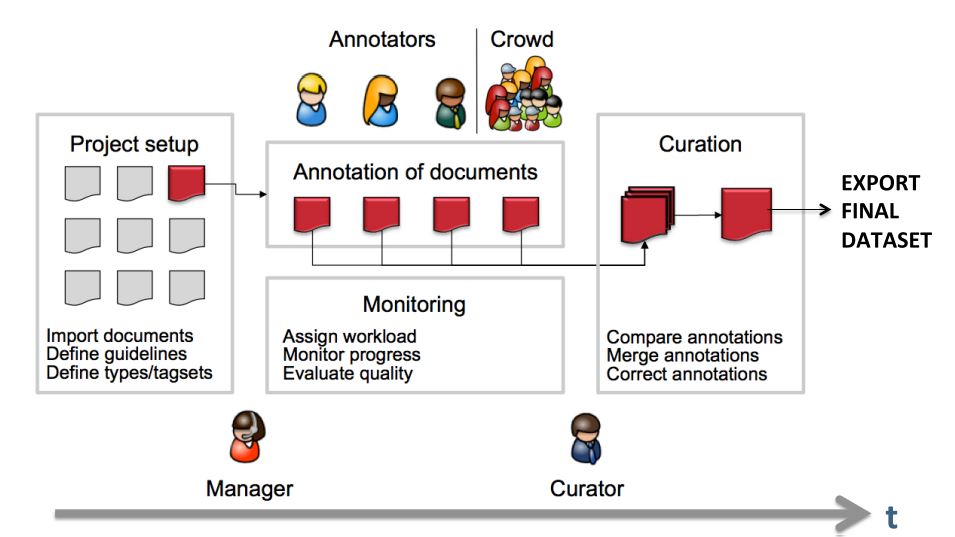

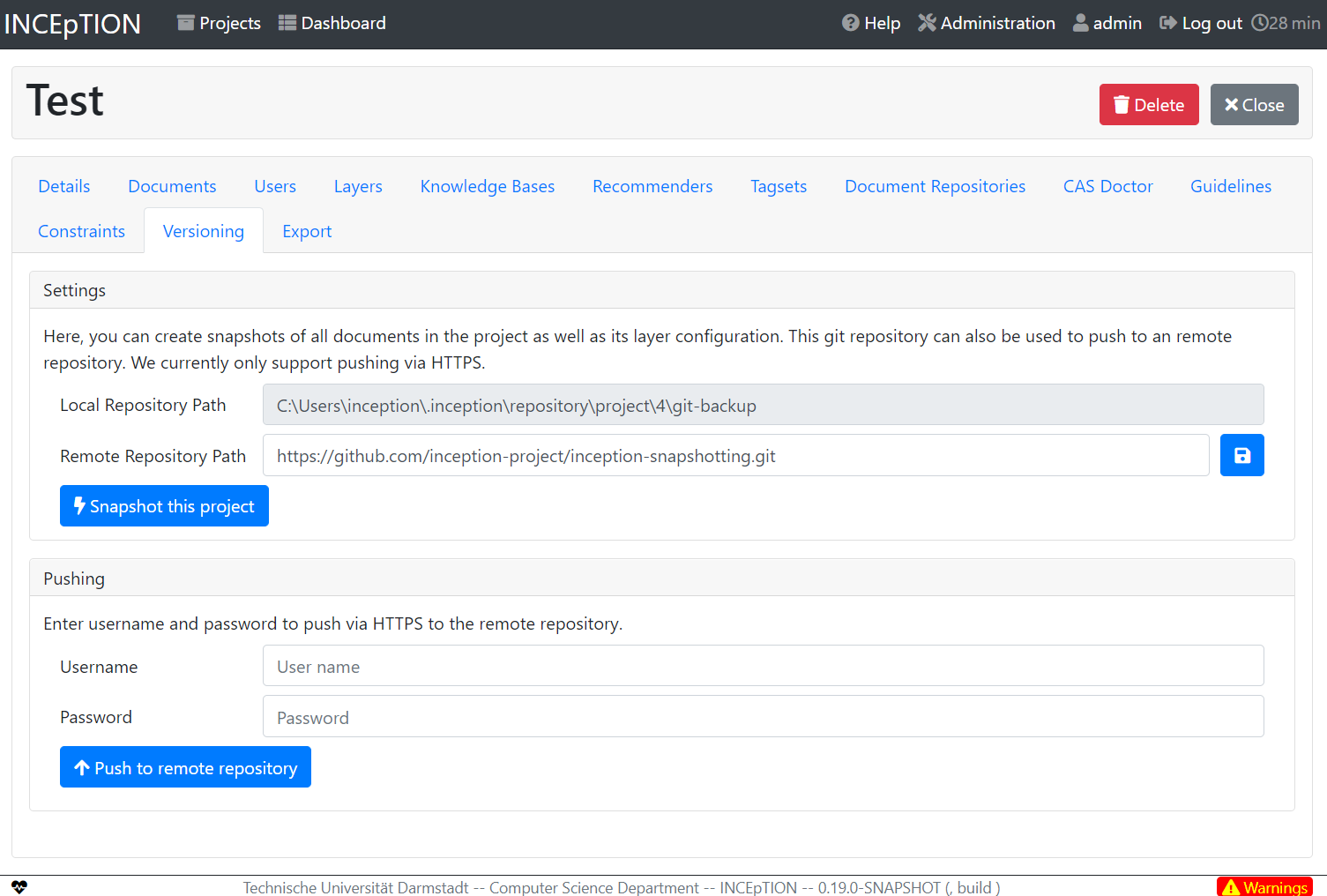

The following image shows an exemplary workflow of an annotation project with INCEpTION.

First, the projects need to be set up. In more detail, this means that users are to be added, guidelines need to be provided, documents have to be uploaded, tagsets need to be defined and uploaded, etc. The process of setting up and managing a project is described in Projects.

After the setup of a project, the users who were assigned with the task of annotation annotate the documents according to the guidelines. The task of annotation is further explained in Annotation. The work of the annotators is managed and controlled by monitoring. Here, the person in charge has to assign the workload. For example, in order to prevent redundant annotation, documents which are already annotated by several other annotators and need not be annotated by another person, can be blocked for others. The person in charge is also able to follow the progress of individual annotators. All these tasks are demonstrated in Workload Management in more detail. The person in charge should not only control the quantity, but also the quality of annotation by looking closer into the annotations of individual annotators. This can be done by logging in with the credentials of the annotators.

After at least two annotators have finished the annotation of the same document by clicking on Done, the curator can start their work. The curator compares the annotations and corrects them if needed. This task is further explained in Curation.

The document merged by the curator can be exported as soon as the curator has clicked on Done for the document. The extraction of curated documents is also explained in Projects.

Logging in

Upon opening the application in the browser, the login screen opens. Please enter your credentials to proceed.

| When INCEpTION is started for the first time, a default user called admin with the password admin is automatically created. Be sure to change the password for this user after logging in (see User Management). |

Dashboard

The dashboard allows you to navigate the functionalities of INCEpTION.

Menu bar

At the top of the screen, there is always a menu bar visible which allows a quick navigation within the application. It offers the following items:

-

Projects - always takes you back to the Project overview.

-

Dashboard - is only visible if it is possible to take you to your last visited Project dashboard.

-

Help - opens the integrated help system in a new browser window.

-

Administration - takes you to the administrator dashboard which allows configuring projects or managing users. This item is only available to administrators.

-

Username - shows the name of the user currently logged in. If the administrator has allowed it, this is a link which allows accessing the current user’s profile, e.g. to change the password.

-

Log out - logs out of the application.

-

Timer - shows the remaining time until the current session times out. When this happens, the browser is automatically redirected to the login page.

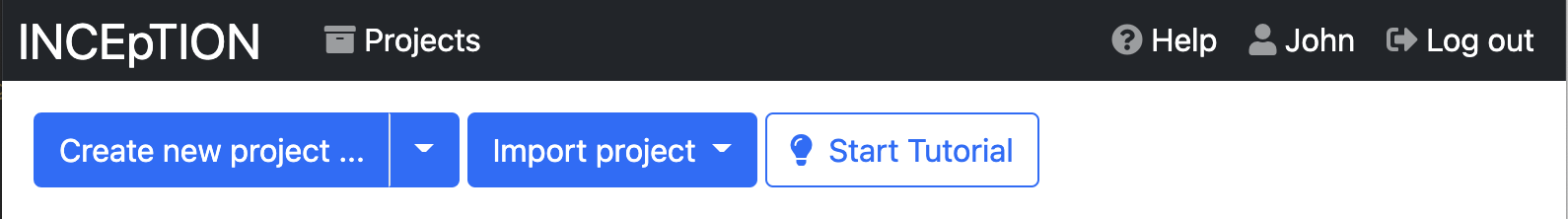

Project overview

After logging in to INCEpTION, the first thing you see is the project overview. Here, you can see all the projects to which you have access. For every project, the roles you have are shown.

Using the filter toggle buttons, you can select which projects are listed depending on the role that you have in them. By default, all projects are visible.

Users with the role project creator can conveniently create new projects or import project archives on this page.

Users without a manager role can leave a project by clicking on the Leave Project button below the project name.

When uploading projects via this page, user roles for the project are not fully imported! If the importing user has the role project creator, then the manager role is added for the importing user. Otherwise, only the roles of the importing user are retained.

If the current instance has users with the same name as those who originally worked on the import project, the manager can add these users to the project and they can access their annotations. Otherwise, only the imported source documents are accessible.

Users with the role administrator who wish to import projects with all permissions and optionally create missing users have to do this through the Projects page, which can be accessed through the Administration link in the menu bar.

Project dashboard

Once you have selected a project from the Project overview, you are taken to this project’s dashboard. Depending on the roles that a user has in the project, different functionalities can be accessed from here such as annotation, curation and project configuration. On the right-hand side of the page, some of the last activities of the user in this project are shown. The user can click on an activity to resume it e.g. if the user annotated a specific document, the annotation page will be opened on this document.

Annotation

| This functionality is only available to annotators and managers. Annotators and managers only see projects in which they hold the respective roles. |

The annotation screen allows you to view text documents and to annotate them.

In addition to the default annotation view, PDF documents can be viewed and annotated using the PDF-Editor. Please refer to PDF Annotation Editor for an explanation on navigating and annotating in the PDF-view.

Opening a Document for Annotation

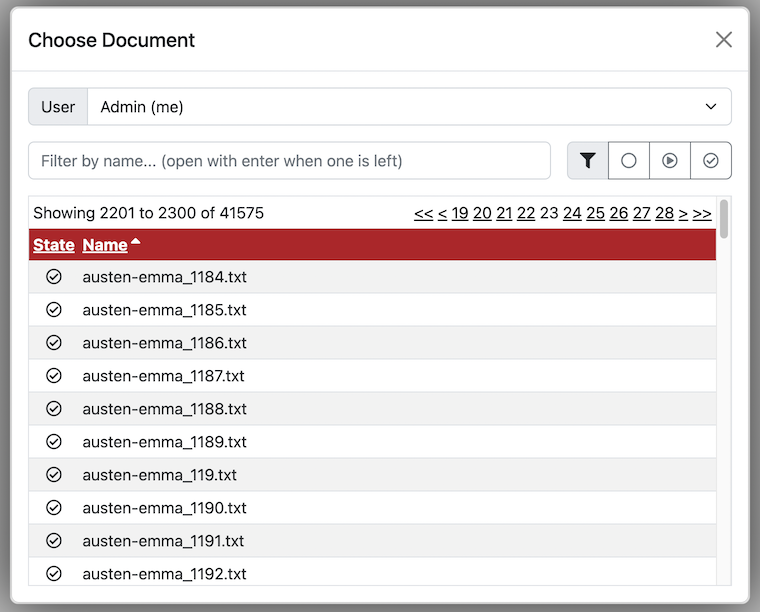

When navigating to the Annotation page, a dialog opens that allows you to select the document you want to annotate. If you want to access this dialog later, use the Open button in the action bar.

The keyboard focus is automatically placed into the search field when the dialog opens. You can use it to conveniently search for documents by name. The table below is automatically filtered according to your input. If only one document is left, you can press ENTER to open it. Otherwise, you can click on a document in the table to open it. The Filter buttons allow you to filter the table by document state.

Users that are managers can additionally open other users' documents to view their annotations but cannot change them. This is done via the User dropdown menu. The user’s own name is listed at the top and marked (me).

Navigation

Sentence numbers on the left side of the annotation page show the exact sentence numbers in the document.

The arrow buttons first page, next page, previous page, last page, and go to page allow you to navigate accordingly.

The Prev. and Next buttons in the Document frame allow you to go to the previous or next document on your project list.

When an annotation is selected, there are additional arrow buttons in the right sidebar which can be used to navigate between annotations on the selected layer within the current document.

You can also use the following keyboard assignments in order to navigate only using your keyboard.

| Key | Action |
|---|---|
| Home | go to the start of the document |
| End | go to the end of the document |
| Page-Down | go to the next page, if not in the last page already |
| Page-Up | go to previous page, if not already in the first page |
| Shift+Page-Down | go to next document in project, if available |
| Shift+Page-Up | go to previous document in project, if available |
| Shift+Cursor-Left | go to previous annotation on the current layer, if available |
| Shift+Cursor-Right | go to next annotation on the current layer, if available |
| Shift+Delete | delete the currently selected annotation |
| Ctrl+End | toggle document state (finished / in-progress) |

Creating annotations

The Layer box in the right sidebar shows the presently active span layer. To create a span annotation, select a span of text or double click on a word.

If a relation layer is defined on top of a span layer, clicking on a corresponding span annotation and dragging the mouse creates a relation annotation.

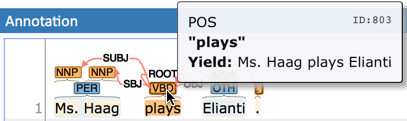

Once an annotation has been created or if an annotation is selected, the Annotation box shows the features of the annotation.

The definition of layers is covered in Section Layers.

Spans

To create an annotation over a span of text, click with the mouse on the text and drag the mouse to create a selection. When you release the mouse, the selected span is activated and highlighted in orange. The annotation detail editor is updated to display the text you have currently selected and to offer a choice on which layer the annotation is to be created. As soon as a layer has been selected, it is automatically assigned to the selected span. To delete an annotation, select a span and click on Delete. To deactivate a selected span, click on Clear.

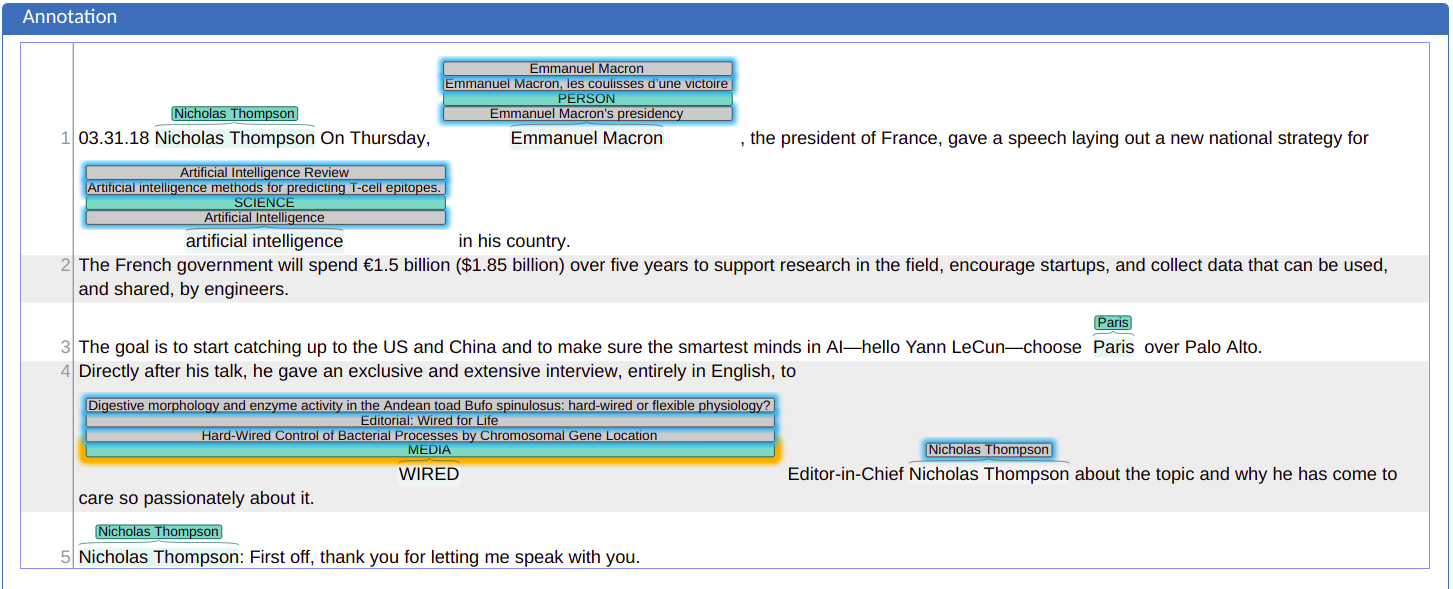

Depending on the layer behavior configuration, span annotations can have any length, can overlap, can stack, can nest, and can cross sentence boundaries.

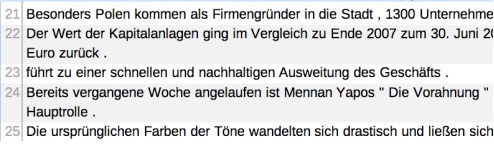

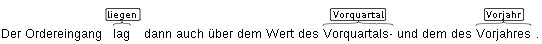

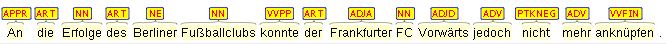

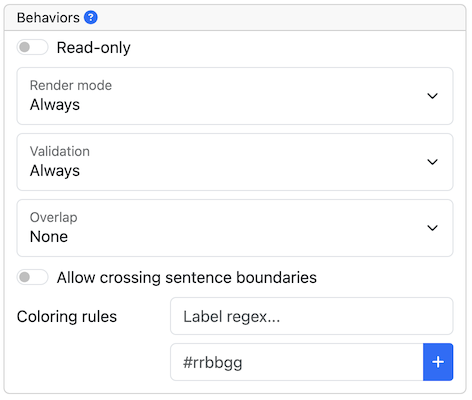

For example, for NE annotation, select the options as shown below (red check mark):

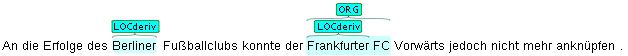

NE annotation can be chosen from a tagset and can span over several tokens within one sentence. Nested NE annotations are also possible (in the example below: "Frankfurter" in "Frankfurter FC").

Lemma annotation, as shown below, is freely selectable over a single token.

POS can be chosen over one token out of a tagset.

To create a zero-length annotation, hold Shift and click on the position where you wish to create the annotation. To avoid accidental creations of zero-length annotations, a simple single-click triggers no action by default. The lock to token behavior cancels the ability to create zero-length annotations.

A zero-width span between two tokens that are directly adjacent, e.g. the full stop at the end of a sentence and the token before it (end.) is always considered to be at the end of the first token rather than at the beginning of the next token. So an annotation between d and . in this example would be rendered at the right side of end rather than at the left side of ..
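
The rule above can be illustrated with a small sketch. It is not taken from the INCEpTION code base: the function name `anchor_token` and the representation of tokens as `(begin, end)` character offsets are assumptions made only for this illustration.

```python
from typing import List, Optional, Tuple

Token = Tuple[int, int]  # (begin, end) character offsets, end is exclusive


def anchor_token(tokens: List[Token], offset: int) -> Optional[int]:
    """Return the index of the token next to which a zero-width span is drawn."""
    # Prefer a token that ends exactly at the offset: the span is then
    # rendered at the right side of that token.
    for index, (_begin, end) in enumerate(tokens):
        if end == offset:
            return index
    # Otherwise use the token that starts at or contains the offset.
    for index, (begin, end) in enumerate(tokens):
        if begin <= offset < end:
            return index
    return None


# "the end." tokenized as "the", "end", "."
tokens = [(0, 3), (4, 7), (7, 8)]
print(anchor_token(tokens, 7))  # index of "end", not of "."
```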

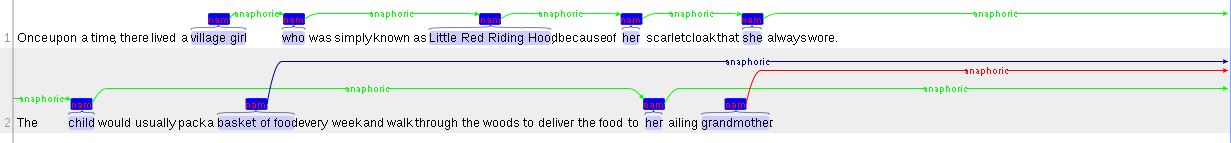

|

Co-reference annotation can be made over several tokens within one sentence. A single token sequence can have several co-ref spans simultaneously.

Relations

In order to create a relation annotation, a corresponding relation layer needs to be defined and attached to the span layer you want to connect the relations to. An example of a relation layer is the built-in Dependency relation layer which connects to the Part of speech span layer, so you can create relations immediately on the Part of speech layer to try it out.

If you want to create relations on other span layers, you need to create a new layer of type Relation in the layer settings. Attach the new relation layer to a span layer. Note that only a single relation layer can connect to any given span layer.

Then you can start connecting the source and target annotations using relations.

There are two ways of creating a relation:

-

for short-distance relations, you can conveniently create a relation by left-clicking on a span and, while keeping the mouse button pressed, moving the cursor over to the target span. A rubber-band arc is shown during this drag-and-drop operation to indicate the location of the relation. To abort the creation of an annotation, hold the CTRL key when you release the mouse button.

-

for long-distance relations, first select the source span annotation. Then locate the target annotation. You can scroll around or even switch to another page of the same document - just make sure that your source span stays selected in the annotation detail editor panel on the right. Once you have located the target span, right-click on it and select Link to…. Mind that long-ranging relations may not be visible as arcs unless both the source and target spans are simultaneously visible (i.e. on the same "page" of the document). So you may have to increase the number of visible rows in the settings dialog to make them visible.

When a relation annotation is selected, the annotation detail panel includes two fields From and To which indicate the origin and target annotations of the relation. These fields include a small cross-hair icon which can be used to jump to the respective annotations.

When a span annotation is selected, its incoming and outgoing relations are also shown in the annotation detail panel. Here, the cross-hair icon can be used to jump to the other endpoint of the relation (i.e. to the other span annotation). There is also an icon indicating whether the relation is incoming to the selected span annotation or whether it is outgoing from the current span. Clicking on this icon will select the relation annotation itself.

Depending on the layer behavior configuration, relation annotations can stack, can cross each other, and can cross sentence boundaries.

To create a relation from a span to itself, press the Shift key before starting to drag the mouse and hold it until you release the mouse button. Or alternatively select the span and then right-click on it and select Link to….

| Currently, there can be at most one relation layer per span layer. Relations between spans of different layers are not supported. |

| Not all arcs displayed in the annotation view belong to chain or relation layers. Some are induced by Link Features. |

When moving the mouse over an annotation with outgoing relations, the info pop-up includes the yield of the relations. This is the text transitively covered by the outgoing relations. This is useful e.g. in order to see all text governed by the head of a particular dependency relation. The text may be abbreviated.
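
As a rough illustration of what "transitively covered" means, the following sketch collects all tokens reachable from a head via outgoing relations. The data layout (a token list and a dictionary from source index to target indices) and the function name `relation_yield` are assumptions for this example. Whether the head itself is part of the yield is not stated above, so this sketch only includes tokens reached through a relation.

```python
from typing import Dict, List


def relation_yield(tokens: List[str], relations: Dict[int, List[int]], head: int) -> str:
    """Return the text of all tokens transitively reachable from `head`."""
    seen = set()
    stack = [head]
    while stack:
        node = stack.pop()
        for target in relations.get(node, []):
            if target not in seen:  # also protects against cycles
                seen.add(target)
                stack.append(target)
    # Output the covered tokens in document order.
    return " ".join(tokens[i] for i in sorted(seen))


tokens = ["The", "dog", "chased", "a", "cat"]
# chased -> dog, chased -> cat, dog -> The, cat -> a
relations = {2: [1, 4], 1: [0], 4: [3]}
print(relation_yield(tokens, relations, 2))
print(relation_yield(tokens, relations, 4))
```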

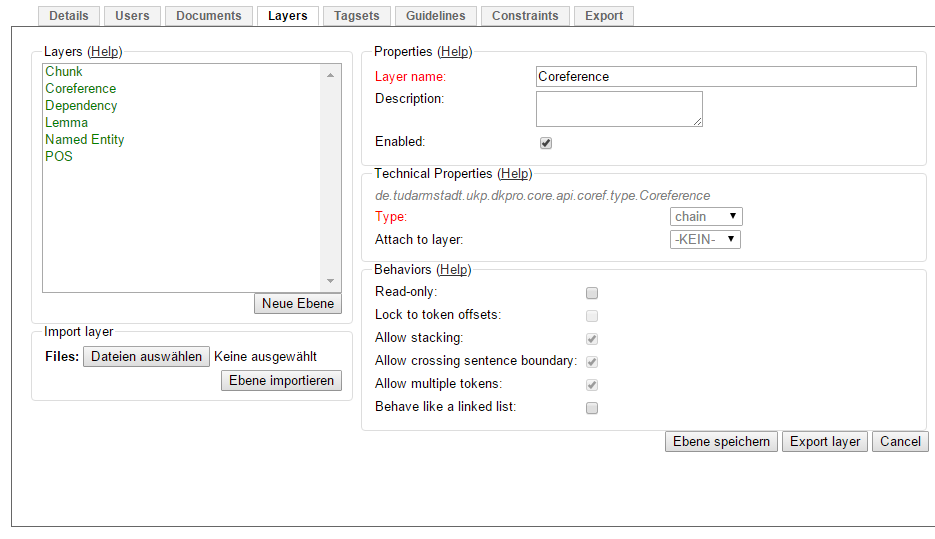

Chains

A chain layer combines both span and relation annotations in a single structural layer. Creating a span annotation in a chain layer basically creates a chain of length one. Creating a relation between two chain elements has different effects depending on whether the linked list behavior is enabled for the chain layer or not. To enable or disable the linked list behaviour, go to Layers in the Projects Settings mode. After choosing Coreference, linked list behaviour is displayed in the checkbox and can either be marked or unmarked.

To abort the creation of an annotation, hold CTRL when you release the mouse button.

| Linked List | Condition | Result |
|---|---|---|
| disabled | the two spans are already in the same chain | nothing happens |
| disabled | the two spans are in different chains | the two chains are merged |
| enabled | the two spans are already in the same chain | the chain will be re-linked such that a chain link points from the source to the target span, potentially creating new chains in the process. |
| enabled | the two spans are in different chains | the chains will be re-linked such that a chain link points from the source to the target span, merging the two chains and potentially creating new chains from the remaining prefix and suffix of the original chains. |
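
The behaviours listed above can be modelled in a simplified way. The sketch below is only an interpretation of the table, not INCEpTION's implementation: chains are modelled as sets of span names when the linked list behaviour is disabled, and as a dictionary of "next" links when it is enabled. All function names are hypothetical, and the enabled case is only modelled for spans in different chains.

```python
def link_without_linked_list(chains, source, target):
    """Linked list disabled: chains are plain sets of spans."""
    a = next(c for c in chains if source in c)
    b = next(c for c in chains if target in c)
    if a is b:
        return chains  # same chain: nothing happens
    # different chains: the two chains are merged
    return [c for c in chains if c is not a and c is not b] + [a | b]


def link_with_linked_list(next_of, source, target):
    """Linked list enabled: `next_of` maps each span to its successor.

    Assumes source and target are in different chains.
    """
    # Drop the old link leaving the source and the old link entering the target.
    relinked = {s: t for s, t in next_of.items() if s != source and t != target}
    relinked[source] = target
    return relinked


def chains_from_links(spans, next_of):
    """Rebuild the chains by following the links from every chain head."""
    targets = set(next_of.values())
    chains = []
    for head in spans:
        if head in targets:
            continue  # not the first element of a chain
        chain = [head]
        while chain[-1] in next_of:
            chain.append(next_of[chain[-1]])
        chains.append(chain)
    return chains


spans = ["a1", "a2", "a3", "b1", "b2", "b3"]
links = {"a1": "a2", "a2": "a3", "b1": "b2", "b2": "b3"}
relinked = link_with_linked_list(links, "a2", "b2")
print(chains_from_links(spans, relinked))
```

In the usage example, linking `a2` to `b2` merges the two chains and leaves the former suffix `a3` and the former prefix `b1` as chains of length one.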

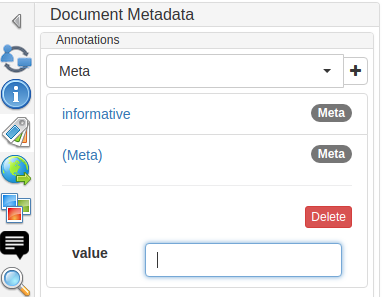

🧪 Document metadata

Experimental feature. To use this functionality, you need to enable it first by adding documentmetadata.enabled=true to the settings.properties file (see the Admin Guide).

|

Curation of document metadata annotations is not possible. Import and export of document metadata annotations is only supported in the UIMA CAS formats, but not in WebAnno TSV.
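
If you manage the settings.properties file with a script, the property mentioned above could be added as in the following sketch. The helper `set_property` is hypothetical and works on the file content as a string; where the file is located depends on your installation (see the Admin Guide), so no path is assumed here.

```python
def set_property(properties_text: str, key: str, value: str) -> str:
    """Return the properties text with `key` set to `value`."""
    output = []
    found = False
    for line in properties_text.splitlines():
        stripped = line.strip()
        is_comment = stripped.startswith("#")
        if not is_comment and stripped.split("=", 1)[0].strip() == key:
            output.append(f"{key}={value}")  # replace the existing entry
            found = True
        else:
            output.append(line)  # keep comments and other settings untouched
    if not found:
        output.append(f"{key}={value}")
    return "\n".join(output) + "\n"


existing = "# my settings\ndocumentmetadata.enabled=false\n"
print(set_property(existing, "documentmetadata.enabled", "true"))
```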

Before being able to configure document-level annotations, you need to define an annotation layer of type Document metadata on the project Settings, Layers tab. For this:

-

Go to Settings → Layers and click the Create button

-

Enter a name for the annotation layer (e.g. Author) and set its type to Document metadata

-

Click Save

-

On the right side of the page, you can now configure features for this annotation layer by clicking Create

-

Again, choose a name and type for the feature, e.g. name of type Primitive: String

-

Click Save

On the annotation page, you can now:

-

Open the Document Metadata sidebar (the tags icon) and

-

Choose the newly created annotation layer in the dropdown.

-

Clicking the plus sign will add a new annotation whose feature you can fill in.

Singletons

If you want to define a document metadata layer for which each document should have exactly one annotation, then you can mark the layer as a singleton. This means that in every document, an annotation of this type is automatically created when the annotator opens the document. It is immediately accessible via the document metadata sidebar - the annotator does not have to create it first. Also, the singleton annotation cannot be deleted.

Primitive Features

Supported primitive feature types are string, boolean, integer, and float.

String features without a tagset are displayed using a text field or a text area with multiple rows. If multiple rows are enabled it can either be dynamically sized or a size for collapsing and expanding can be configured. The multiple rows, non-dynamic text area can be expanded if focused and collapses again if focus is lost.

In case the string feature has a tagset, it instead appears as a radio group, a combobox, or an auto-complete field - depending on how many tags are in the tagset or whether a particular editor type has been chosen.

There is also the option to have multi-valued string features. These are displayed as a multi-value select field and can be used with or without an associated tagset. Keyboard shortcuts are not supported.

Boolean features are displayed as a checkbox that can either be marked or unmarked.

Integer and float features are displayed using a number field. However, if an integer feature is limited and the difference between the maximum and minimum is lower than 12, it can also be displayed with a radio button group instead.
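
The condition for the radio button group can be written down as a small check. This is only a sketch of the rule as stated above; the function name and the use of `None` for "no limit configured" are assumptions.

```python
from typing import Optional

RADIO_GROUP_MAX_RANGE = 12  # threshold taken from the description above


def may_use_radio_group(minimum: Optional[int], maximum: Optional[int]) -> bool:
    """Check whether a limited integer feature qualifies for a radio button group."""
    if minimum is None or maximum is None:
        return False  # the feature is not limited on both sides
    return (maximum - minimum) < RADIO_GROUP_MAX_RANGE


print(may_use_radio_group(1, 5))    # small range
print(may_use_radio_group(0, 100))  # range too large
```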

Link Features

Link features can be used to link one annotation to others. Before a link can be made, a slot must be added. If role labels are enabled, enter the role label in the text field and press the add button to create the slot. Next, click on the field in the newly created slot to arm it. The field’s color will change to indicate that it is armed. Now you can fill the slot by double-clicking on a span annotation. To remove a slot, arm it and then press the del button.

Once a slot has been filled, there is a cross-hair icon in the slot field header which can be used to navigate to the slot filler.

When a span annotation is selected which acts as a slot filler in any link feature, then the annotation owning the slot is shown in the annotation detail panel. Here, the cross-hair icon can be used to jump to the slot owner.

If role labels are enabled, they can be changed by the user at any time. To change a previously selected role label, no prior deletion is needed. Just click on the slot you want to change (it will be highlighted in orange) and choose another role label.

If there is a large number of tags in the tagset associated with the link feature, the role combobox is replaced with an auto-complete field. The difference is that in the auto-complete field, there is no button to open the dropdown to select a role. Instead, you can press space or use the cursor-down keys to cause the dropdown menu for the role to open. Also, the dropdown only shows up to a configurable maximum of matching tags. You can type in something (e.g. action) to filter for items containing action. The threshold for displaying an auto-complete field and the maximum number of tags shown in the dropdown can be configured globally. The settings are documented in the administrators guide.

If role labels are disabled for the link feature layer they cannot be manually set by the user. Instead the UI label of the linked annotation is displayed.

Image Features

Image URL features can be used to link a piece of text to an image. The image must be accessible via a URL. When the user edits an annotation, the URL is displayed in the feature editor. The actual images can be viewed via the image sidebar.

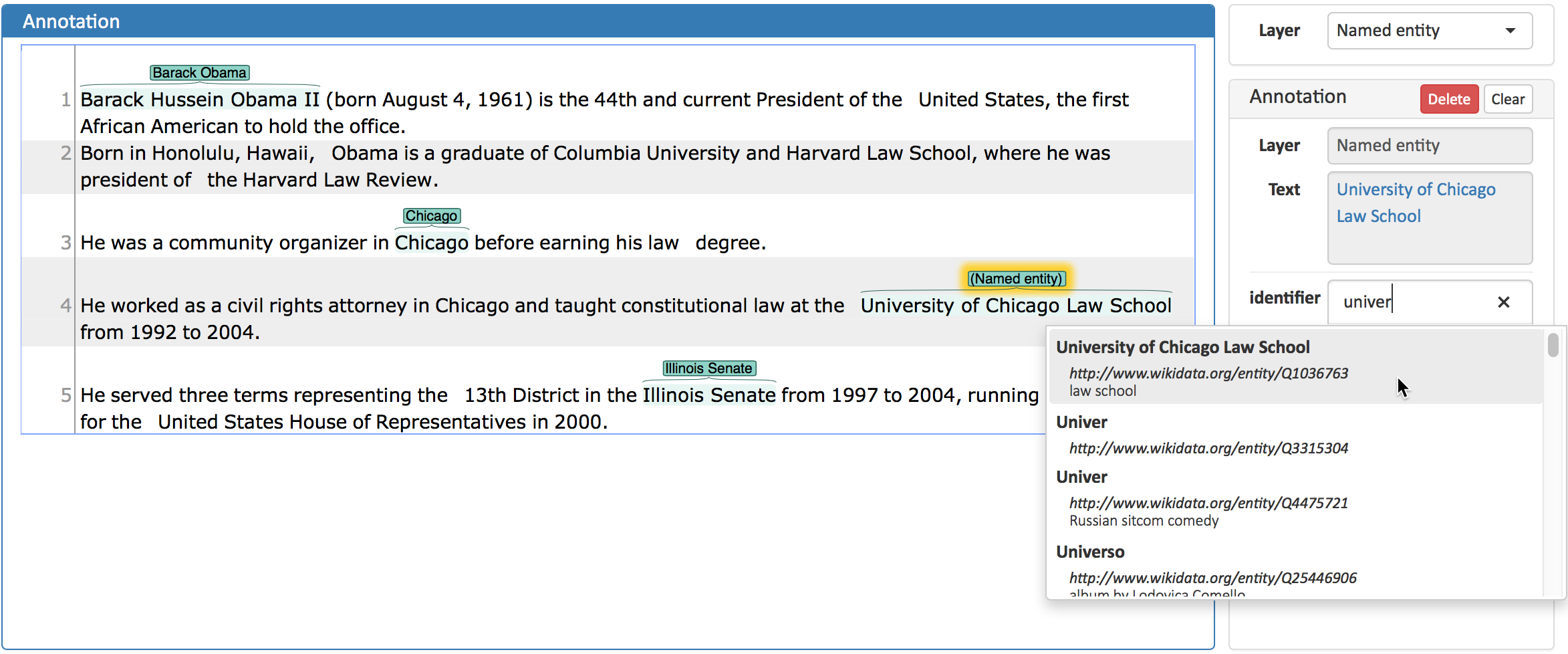

Concept features

Concept features allow linking an annotation to a concept (class, instance, property) from a knowledge base.

There are two types of concept features: single-value and multi-value. A single-value feature can only link an annotation to a single concept. The single-value feature is displayed using an auto-complete field. When a concept has been linked, its description is shown below the auto-complete field. A multi-value concept feature allows linking the annotation to more than one concept. It is shown as a multi-select auto-complete field. When hovering with the mouse over one of the linked concepts, its description is displayed as a tooltip.

Typing into the field triggers a query against the knowledge base and displays candidates in a dropdown list. The query takes into account not only what is typed into the input field, but also the annotated text.

| Just press SPACEBAR instead of writing anything into the field to search the knowledge base for concepts matching the annotated text. |

The query entered into the field only matches against the label of the knowledge base items, not against their description. However, you can filter the candidates by their description. E.g. if you wish to find all knowledge base items with Obama in the label and president in the description, then you can write Obama :: president. A case-insensitive matching is being used.
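
To make the `::` syntax more tangible, here is a minimal sketch of how such a query could be split and applied to a list of candidates. It uses plain case-insensitive substring matching on an in-memory list; the actual matching is performed by the knowledge base and may differ. The function names and the candidate entries are made up for this example.

```python
def parse_query(query: str):
    """Split 'label :: description' into its two parts."""
    label_part, separator, description_part = query.partition("::")
    return label_part.strip(), (description_part.strip() if separator else "")


def filter_candidates(candidates, query: str):
    """Keep candidates whose label and description match the query parts."""
    label_query, description_query = parse_query(query)
    label_query = label_query.casefold()
    description_query = description_query.casefold()
    return [
        c for c in candidates
        if label_query in c["label"].casefold()
        and description_query in c["description"].casefold()
    ]


# Hypothetical candidates, for illustration only
candidates = [
    {"label": "Barack Obama", "description": "44th President of the United States"},
    {"label": "Michelle Obama", "description": "American lawyer and author"},
    {"label": "Obama, Fukui", "description": "city in Japan"},
]
for match in filter_candidates(candidates, "Obama :: president"):
    print(match["label"])
```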

If the knowledge base is configured for additional matching properties and the value entered into the field matches such an additional property, then the label property will be shown separately in the dropdown. In this case, filtering does not only apply to the description but also to the canonical label.

Depending on the knowledge base and full-text mode being used, there may be fuzzy matching. To filter the candidate list down to those candidates which contain a particular substring, put double quotes around the query, e.g. "County Carlow". A case-insensitive matching is being used.

You can enter a full concept IRI to directly link to a particular concept. Note that searching for IRIs by substrings or short forms is not possible. The entire IRI as used by the knowledge base must be entered. This allows linking to concepts which have no label - however, it is quite inconvenient. It is much more convenient if you can ensure that your knowledge base offers labels for all its concepts.

The number of results displayed in the dropdown is limited. If you do not find what you are looking for, try typing in a longer search string. If you know the IRI of the concept you are looking for, try entering the IRI. Some knowledge bases (e.g. Wikidata) are not making a proper distinction between classes and instances. Try configuring the Allowed values in the feature settings to any to compensate.

Instead of searching a concept using the auto-complete field, you can also browse the knowledge base. However, this is only possible if:

-

the concept feature is bound to a specific knowledge base or the project contains only a single knowledge base;

-

the concept feature allowed values setting is not set to properties.

Note that only concepts and instances can be linked, not properties - even if the allowed values setting is set to any.

Annotation Sidebar

The annotation sidebar provides an overview over all annotations in the current document. It is located in the left sidebar panel.

The sidebar supports two modes of displaying annotations:

-

Grouped by label (default): Annotations are grouped by label. Every annotation is represented by its text. If the same text is annotated multiple times, there will be multiple items with the same text. To help disambiguating between the same text occurring in different contexts, each item also shows a bit of trailing context in a lighter font. Additionally, there is a small badge in every annotation item which allows selecting the annotation or deleting it. Clicking on the text itself will scroll to the annotation in the editor window, but it will not select the annotation. If an item represents an annotation suggestion from a recommender, the badge instead has buttons for accepting or rejecting the suggestion. Again, clicking on the text will scroll the editor window to the suggestion without accepting or rejecting it. Within each group, annotations are sorted alphabetically by their text. If the option sort by score is enabled, then suggestions are sorted by score.

-

Grouped by position: In this mode, the items are ordered by their position in the text. Relation annotations are grouped under their respective source span annotation. If there are multiple annotations at the same position, then there are multiple badges in the respective item. Each of these badges shows the label of an annotation present at this position and allows selecting or deleting it. Clicking on the text will scroll to the respective position in the editor window.
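
The grouping and sorting in the "grouped by label" mode can be sketched as follows. The item layout (dictionaries with `label`, `text` and an optional `score`) and the function name are assumptions for this illustration. The sketch also assumes that a higher score is listed first and, for simplicity, that all items are suggestions when sorting by score.

```python
from collections import defaultdict


def group_by_label(items, sort_by_score=False):
    """Group sidebar items by label and sort the members of each group."""
    groups = defaultdict(list)
    for item in items:
        groups[item["label"]].append(item)
    for members in groups.values():
        if sort_by_score:
            # assumption: suggestions with a higher score come first
            members.sort(key=lambda item: -item.get("score", 0.0))
        else:
            members.sort(key=lambda item: item["text"].casefold())
    return dict(groups)


items = [
    {"label": "PER", "text": "Obama", "score": 0.9},
    {"label": "LOC", "text": "Paris", "score": 0.4},
    {"label": "PER", "text": "Ann", "score": 0.2},
]
for label, members in group_by_label(items).items():
    print(label, [m["text"] for m in members])
```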

Undo/re-do

The undo/re-do buttons in the action bar allow you to undo annotation actions or to re-do an undone action.

This functionality is only available while working on a particular document. When switching to another document, the undo/redo history is reset.

| Key | Action |
|---|---|
| Ctrl-Z | undo last action |
| Shift-Ctrl-Z | re-do last un-done action |

| Not all actions can be undone or redone. E.g. bulk actions are not supported. While undoing the creation of chain span and chain link annotations is supported, re-doing these actions or undoing their deletions is not supported. |
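
Conceptually, an undo/re-do history that is reset when switching documents behaves like two stacks. The class below is a generic sketch of that idea and not INCEpTION's implementation. The class name, the method names and the rule that a new action discards the re-do stack are assumptions based on common editor behaviour.

```python
class UndoHistory:
    """Per-document undo/re-do history kept as two stacks."""

    def __init__(self):
        self._undo = []
        self._redo = []

    def record(self, action):
        # A new action invalidates anything that could be re-done (assumed).
        self._undo.append(action)
        self._redo.clear()

    def undo(self):
        if not self._undo:
            return None
        action = self._undo.pop()
        self._redo.append(action)
        return action

    def redo(self):
        if not self._redo:
            return None
        action = self._redo.pop()
        self._undo.append(action)
        return action

    def reset(self):
        # Called when another document is opened.
        self._undo.clear()
        self._redo.clear()


history = UndoHistory()
history.record("create span")
history.record("set feature value")
print(history.undo())  # the most recent action is undone first
print(history.redo())
history.reset()
print(history.undo())  # nothing is left after switching documents
```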

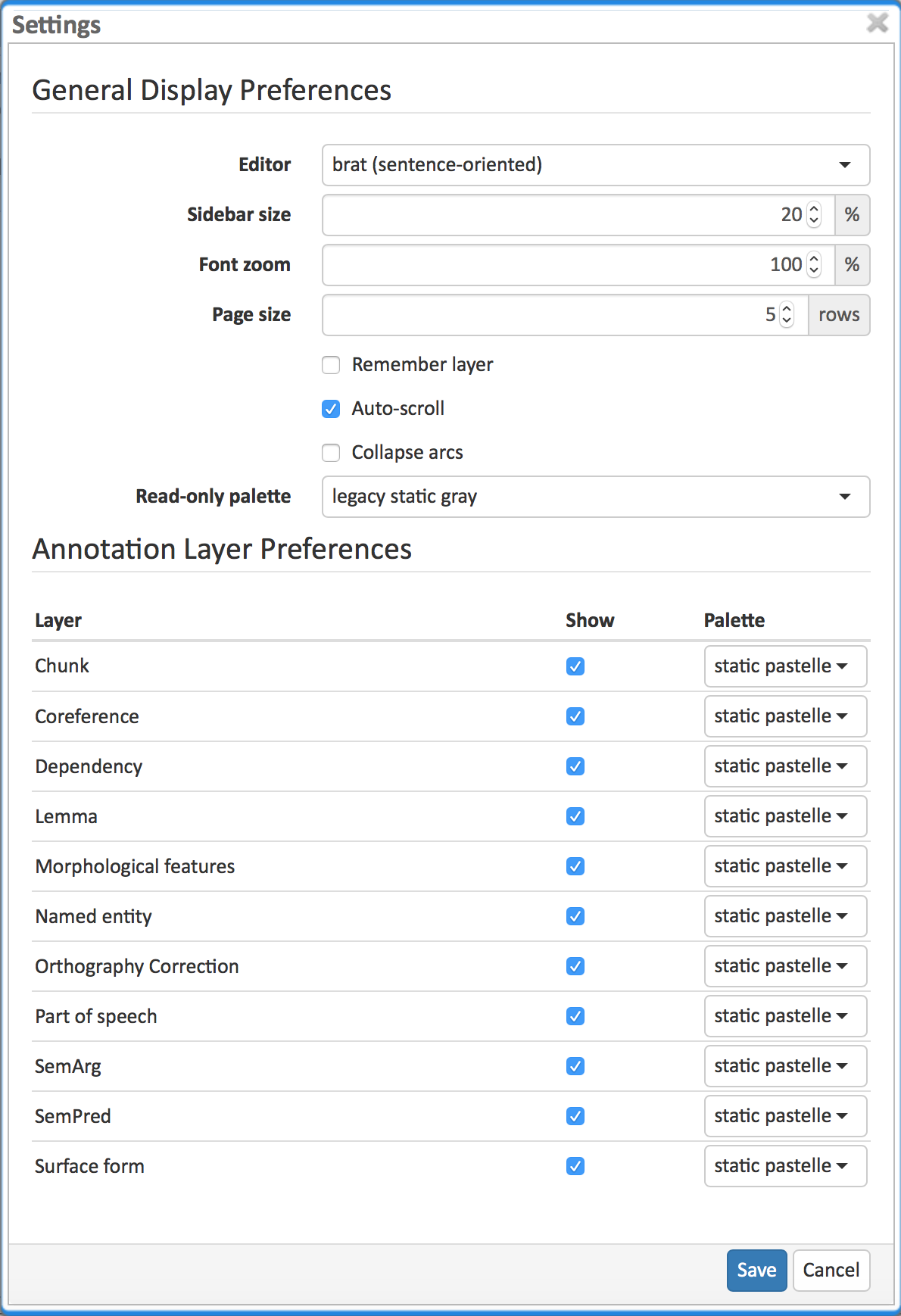

Settings

Once the document is opened, a default of 5 sentences is loaded on the annotation page. The Settings button will allow you to specify the settings of the annotation layer.

The Editor setting can be used to switch between different modes of presentation. It is currently only available on the annotation page.

The Sidebar size controls the width of the sidebar containing the annotation detail editor and actions box. In particular on small screens, increasing this can be useful. The sidebar can be configured to take between 10% and 50% of the screen.

The Font zoom setting controls the font size in the annotation area. This setting may not apply to all editors.

The Page size controls how many sentences are visible in the annotation area. The more sentences are visible, the slower the user interface will react. This setting may not apply to all editors.

The Auto-scroll setting controls if the annotation view is centered on the sentence in which the last annotation was made. This can be useful to avoid manual navigation. This setting may not apply to all editors.

The Collapse arcs setting controls whether long ranging relations can be collapsed to save space on screen. This setting may not apply to all editors.

The Read-only palette controls the coloring of annotations on read-only layers. This setting overrides any per-layer preferences.

Layer preferences

In this section you can select which annotation layers are displayed during annotation and how they are displayed.

Hiding layers is useful to reduce clutter if there are many annotation layers. Mind that hiding a layer which has relations attached to it will also hide the respective relations. E.g. if you disable POS, then no dependency relations will be visible anymore.

The Palette setting for each layer controls how the layer is colored. There are the following options:

-

static / static pastelle - all annotations receive the same color

-

dynamic / dynamic pastelle - all annotations with the same label receive the same color. Note that this does not imply that annotations with different labels receive different colors.

-

static grey - all annotations are grey.

Mind that there is a limited number of colors such that eventually colors will be reused. Annotations on chain layers always receive one color per chain.
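
Why the same label always gets the same color while different labels may still share one can be seen in a small sketch. The palette values and the function name are made up, and using a checksum of the label to pick a color is just one possible way to get this behaviour, not necessarily the one used by INCEpTION.

```python
import zlib

# Hypothetical palette with only four colors
PALETTE = ["#8dd3c7", "#ffffb3", "#bebada", "#fb8072"]


def color_for_label(label: str, palette=PALETTE) -> str:
    """Pick a color deterministically from the label text."""
    checksum = zlib.crc32(label.encode("utf-8"))
    return palette[checksum % len(palette)]


labels = ["PER", "LOC", "ORG", "OTH", "MISC"]
colors = {label: color_for_label(label) for label in labels}
print(colors)
# Five labels but only four colors: at least two labels must share a color.
print(len(set(colors.values())) < len(labels))
```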

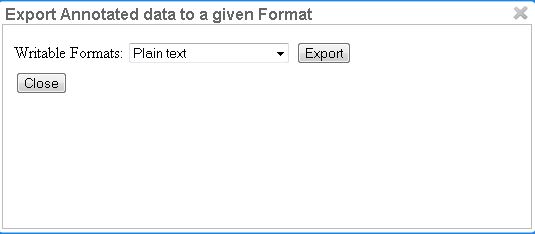

Export

Annotations are always immediately persistent in the backend database. Thus, it is not necessary to save the annotations explicitly. Also, losing the connection through network issues or timeouts does not cause data loss. To obtain a local copy of the current document, click on export button. The following frame will appear:

Choose your preferred format. Note that the plain text format does not contain any annotations and that files in the binary format need to be unpacked before further usage. For further information on the supported formats, please consult the corresponding chapter Formats.

The document will be saved to your local disk. It can be re-imported by a project manager, who adds the document to a project. Please export your data periodically, at least when you finish a document or stop annotating for an extended period of time.

Search

The search module allows searching for words, passages and annotations made in the documents of a given project. Currently, the default search is provided by MTAS (Multi Tier Annotation Search), a Lucene/Solr based search and indexing mechanism (https://github.com/textexploration/mtas).

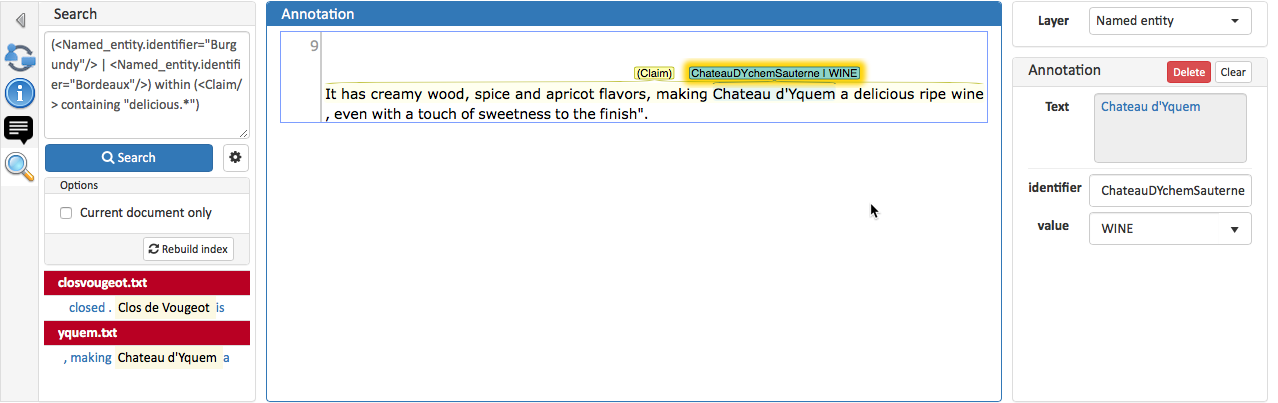

To perform a search, access the search sidebar located at the left of the screen, write a query and press the Search button. The results are shown below the query in a KWIC (keyword in context) style grouped by document. Clicking on a result will open the match in the main annotation editor.

The search only considers documents in the current project and only matches annotations made by the current user.

| Very long annotations and tokens (longer than several thousand characters) are not indexed and cannot be found by the search. |

Clicking on the search settings button (cog wheel) shows additional options:

-

Current document only limits the search to the document shown in the main annotation editor. When switching to another document, the result list does not change automatically - the search button needs to be pressed again in order to show results from the new document.

-

Rebuild index may help to fix search issues (e.g. no or only partial results), in particular after upgrading to a new version of INCEpTION. Note that this process may take quite some time depending on the number of documents in the project.

-

Grouping by groups the search results by the values of the selected annotation feature. If no layer and no feature is selected, the search results are grouped by document title by default.

-

Low level paging will apply paging of search results directly at query level. This means only the next n results are fetched every time a user switches to a new page of results (where n is the page size). Thus the total number of results for a result group is unknown. This option should be activated if a query is expected to yield a very large number of results so that fetching all results at once would slow down the application too much. This option can only be activated if results are being grouped by document.
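The idea behind low level paging can be sketched as follows. This is only an illustration under stated assumptions: `run_query`, `fetch_page` and the fake backend are hypothetical names and are not part of INCEpTION or MTAS. Each page request asks the backend for just n hits starting at an offset, so the caller never learns the total number of hits.

```python
def fetch_page(run_query, page, page_size):
    """Fetch only the hits for one page; the total hit count stays unknown."""
    offset = page * page_size
    return run_query(offset=offset, limit=page_size)


# Stand-in for a search backend (hypothetical): 25 fake hits, served by slicing.
FAKE_HITS = [f"hit-{i}" for i in range(25)]


def fake_query(offset, limit):
    return FAKE_HITS[offset:offset + limit]


first_page = fetch_page(fake_query, page=0, page_size=10)
last_page = fetch_page(fake_query, page=2, page_size=10)
print(len(first_page), len(last_page))
```

A page that comes back shorter than the page size is the only hint that the end of the results has been reached.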

Creating/Deleting Annotations for Search Results

The user can also use the search to create and/or delete annotations for a set of selected search results.

This means that annotations will be created/deleted at the token offsets of the selected search results. Search results can be selected via the checkbox on the left. By default, all search results are selected. If a result originates from a document which the user has already marked as finished, there is no checkbox since such documents cannot be modified anyway.

The currently selected annotation in the annotation editor serves as template for the annotations that are to be created/deleted. Note that selecting an annotation first is necessary for creating/deleting annotations in this way.

| The slots and slot fillers of slot features are not copied from the template to newly created annotations. |

Clicking on the create settings button (cog wheel) shows additional options:

-

Override existing will override an existing annotation of the same layer at a target location. If this option is disabled, annotations of the same layer will be stacked if stacking is enabled for this layer. Otherwise no annotation will be created.
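The rule described for Override existing can be written down as a small decision function. This is a sketch of the rule as stated above, not INCEpTION code; the function name and the returned action strings are made up for illustration.

```python
def creation_action(has_existing, override_existing, stacking_enabled):
    """Decide what happens at one search result when creating an annotation.

    has_existing: an annotation of the same layer already sits at the target location.
    """
    if not has_existing:
        return "create"
    if override_existing:
        return "override"
    if stacking_enabled:
        return "stack"
    # Existing annotation, no override, no stacking: nothing is created.
    return "skip"


for args in [(False, False, False), (True, True, False),
             (True, False, True), (True, False, False)]:
    print(args, "->", creation_action(*args))
```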

Clicking on the delete settings button (cog wheel) shows additional options:

-

Delete only matching feature values will only delete annotations at search results that exactly match the currently selected annotation including all feature values. If this option is disabled all annotations with the same layer as the currently selected annotation will be deleted regardless of their feature values. Note that slot features are not taken into account when matching the selected annotation against candidates to be deleted.
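The matching behaviour of Delete only matching feature values can be sketched as below. The dictionary representation of an annotation and the names `should_delete` and `slot_features` are assumptions for illustration only; they do not reflect INCEpTION's internal data model.

```python
def should_delete(candidate, template, only_matching, slot_features=()):
    """Return True if the candidate annotation would be deleted.

    candidate/template: dicts with a "layer" name and a "features" dict.
    slot_features: names of slot features, which are ignored when matching.
    """
    if candidate["layer"] != template["layer"]:
        return False
    if not only_matching:
        # Option disabled: every annotation on the same layer is deleted.
        return True
    ignored = set(slot_features)
    cand = {k: v for k, v in candidate["features"].items() if k not in ignored}
    tmpl = {k: v for k, v in template["features"].items() if k not in ignored}
    return cand == tmpl


template = {"layer": "Named entity", "features": {"value": "LOC"}}
same = {"layer": "Named entity", "features": {"value": "LOC"}}
other = {"layer": "Named entity", "features": {"value": "PER"}}
print(should_delete(same, template, True), should_delete(other, template, True))
```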

Mtas search syntax

The INCEpTION Mtas search provider allows queries to be executed using CQL (Corpus Query Language), as shown in the following examples. More examples and information about CQL syntax can be found at https://meertensinstituut.github.io/mtas/search_cql.html.

When performing queries, the user must reference the annotation types using the layer names, as defined in the project schema. In the same way, the features must be referenced using their names as defined in the project schema. In both cases, empty spaces in the names must be replaced by an underscore.

Thus, Lemma refers to the Lemma layer, Lemma.Lemma refers to the Lemma feature in the Lemma layer. In the same way, Named_entity refers to the Named entity layer, and Named_entity.value refers to the value feature in the Named entity layer.

Annotations made over single tokens can be queried using the […] syntax, while annotations made over multiple tokens must be queried using the <…/> syntax.

In the first case, the user must always provide a feature and a value. The following syntax returns all single token annotations of the LayerX layer whose FeatureX feature has the given value.

[LayerX.FeatureX="value"]

In the second case, the user may or may not provide a feature and a value. Thus, the following syntax will return all multi-token annotations of the LayerX layer, regardless of their features and values.

<LayerX/>

On the other hand, the following syntax will return the multi-token annotations whose FeatureX feature has the given value.

<LayerX.FeatureX="value"/>

Notice that the multi-token query syntax can also be used to retrieve single token annotations (e.g. POS or lemma annotations).
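The naming and bracketing rules above can be summarised in a few helper functions that assemble query strings. These helpers are hypothetical and not part of INCEpTION; they only build text that could be pasted into the search field, and they do not escape special characters inside the value.

```python
def query_name(name):
    """Replace spaces in a layer or feature name with underscores."""
    return name.replace(" ", "_")


def single_token_query(layer, feature, value):
    """Build a [...] query; a feature and a value are always required."""
    return f'[{query_name(layer)}.{query_name(feature)}="{value}"]'


def multi_token_query(layer, feature=None, value=None):
    """Build a <.../> query; feature and value are optional but go together."""
    if (feature is None) != (value is None):
        raise ValueError("provide both feature and value, or neither")
    if feature is None:
        return f"<{query_name(layer)}/>"
    return f'<{query_name(layer)}.{query_name(feature)}="{value}"/>'


print(single_token_query("Lemma", "Lemma", "sign"))
print(multi_token_query("Named entity"))
print(multi_token_query("Named entity", "value", "LOC"))
```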

Text queries

Galicia

"Galicia"

The capital of Galicia

"The" "capital" "of" "Galicia"

Span layer queries

[Lemma.Lemma="sign"]

<Named_entity/>

<Named_entity.value="LOC"/>

[Lemma.Lemma="be"] [Lemma.Lemma="sign"]

"house" [POS.PosValue="VERB"]

[POS.PosValue="VERB"]<Named_entity/>

<Named_entity/>{2}

<Named_entity/> <Named_entity/>

<Named_entity/> [] <Named_entity/>

<Named_entity/> []? <Named_entity/>

<Named_entity/> []{2} <Named_entity/>

<Named_entity/> []{1,3} <Named_entity/>

(<Named_entity.value="OTH"/> | <Named_entity.value="LOC"/>)

[POS.PosValue="VERB"] within [Lemma.Lemma="sign"]

[POS.PosValue="DET"] within <Named_entity/>

[POS.PosValue="DET"] !within <Named_entity/>

<Named_entity/> containing [POS.PosValue="DET"]

<Named_entity/> !containing [POS.PosValue="DET"]

<Named_entity.value="LOC"/> intersecting <SemArg/>

(<Named_entity.value="OTH"/> | <Named_entity.value="LOC"/>) within <SemArg/>

(<Named_entity.value="OTH"/> | <Named_entity.value="LOC"/>) intersecting <SemArg/>

<s> []{0,50} <Named_entity.value="PER"/> []{0,50} </s> within <s/>

Relation layer queries

INCEpTION allows queries over relation annotations as well. When relations are indexed, they are indexed by the position of their target span. This means that the match highlighted for a query corresponds to the text of the target of the relation.

For the following examples, we assume a span layer called component and a relation layer called rel attached to it. Both layers have a string feature called value.

<rel.value="foo"/>

<rel-source="foo"/>

<rel-target="foo"/>

<rel-source.value="foo"/>

<rel-target.value="foo"/>

<rel.value="bar"/> fullyalignedwith <rel-target.value="foo"/>

<rel.value="bar"/> fullyalignedwith (<rel-source.value="foo"/> fullyalignedwith <rel-target.value="foo"/>)

Concept feature queries

<KB-Entity="Bordeaux"/>

The following query returns all mentions of ChateauMorgonBeaujolais or any of its subclasses in the associated knowledge base.

<Named_entity.identifier="ChateauMorgonBeaujolais"/>

Mind that the label of a knowledge base item may be ambiguous, so it may be necessary to search by IRI.

<Named_entity.identifier="http://www.w3.org/TR/2003/PR-owl-guide-20031209/wine#ChateauMorgonBeaujolais"/>

<Named_entity.identifier-exact="ChateauMorgonBeaujolais"/>

(<Named_entity.identifier-exact="ChateauMorgonBeaujolais"/> | <Named_entity.identifier-exact="AmericanWine"/>)

Statistics

The statistics section provides useful statistics about the project. Currently, the statistics are provided by MTAS (Multi Tier Annotation Search), a Lucene/Solr based search and indexing mechanism (https://github.com/textexploration/mtas).

High-level statistics sidebar

To reach the statistics sidebar, go to Annotation, open a document and choose the statistics sidebar on the left, indicated by the clipboard icon. Select a granularity and a statistic which shall be displayed. After clicking the calculate button, the results are shown in the table below.

| Clicking the Calculate button will compute all statistics and all granularities at once. The dropdowns are just there to reduce the size of the table. Therefore, depending on the size of the project, clicking the Calculate button may take a while. The exported file always contains all statistics, so it is significantly larger than the displayed table. |

For the calculation of the statistics, all documents which the current user has access to are considered. The statistics are computed for all layer/feature combinations. Please make sure that the names of the layer/feature combinations are valid (e.g. they don’t contain incorrect bracketing).

-

Granularity: Currently, there are two options to choose from, per Document and per Sentence. What they compute is best illustrated by an example. Assume you have 3 documents, the first with 1 sentence, the second with 2 sentences and the third with 3 sentences. Let Xi be the number of occurrences of feature X (e.g. the Feature "value" in the Layer "named entity") in document i (i = 1, 2, 3). Then per Document is just the vector Y = (X1, X2, X3), i.e. we look at the raw occurrences per document. In contrast, per Sentence calculates the vector Z = (X1/1, X2/2, X3/3), i.e. it divides the number of occurrences in each document by the number of sentences in that document. The resulting vector is then evaluated according to the chosen statistic (e.g. Mean(Y) = (X1 + X2 + X3)/3, Max(Z) = max(X1/1, X2/2, X3/3)).

-

Statistic: The kind of statistic which is displayed in the table. Let (Y1, …, Yn) be a vector of real numbers. Its values are calculated as shown in the Granularity section above.

-

Maximum: the greatest entry, i.e. max(Y1, …, Yn)

-

Mean: the arithmetic mean of the entries, i.e. (Y1 + … + Yn)/n

-

Median: the entry in the middle of the sorted vector, i.e. let Z = (Z1, …, Zn) be a vector which contains the same entries as Y, but in ascending order (Z1 ≤ Z2 ≤ … ≤ Zn). Then the median is the entry of Z at position (n+1)/2 if n is odd, or the mean of the entries of Z at positions n/2 and n/2 + 1 if n is even

-

Minimum: the smallest entry, i.e. min(Y1, …, Yn)

-

Number of Documents: the number of documents considered, i.e. n

-

Standard Deviation: 1/n * ((Y1 - Mean(Y))^2 + … + (Yn - Mean(Y))^2)

-

Sum: the total number of occurrences across all documents, i.e. Y1 + … + Yn

-

| The two artificial features token and sentence are contained in the artificial layer Segmentation and statistics for them are computed. Note that per Sentence statistics of Segmentation.sentence are trivial so they are omitted from the table and the downloadable file. |

-

Hide empty layers: Usually, a project does not use all layers. If a feature of a layer never occurs, all its statistics (except Number of Documents) will be zero. Tick this box and press the Calculate button again to omit them from the displayed table. If you then download the table, the generated file will not include these layers.
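The Granularity and Statistic definitions above can be worked through with a short sketch. The occurrence counts are made up, and the code is not how INCEpTION or MTAS computes the values internally. Note that the entry for Standard Deviation follows the formula exactly as listed above, which contains no square root; whether the tool applies one is not stated in this guide.

```python
from statistics import mean, median

occurrences = [4, 6, 3]  # X1, X2, X3: made-up counts of feature X per document
sentences = [1, 2, 3]    # sentence counts of the three example documents

per_document = occurrences                                       # Y = (X1, X2, X3)
per_sentence = [x / s for x, s in zip(occurrences, sentences)]   # Z = (X1/1, X2/2, X3/3)


def summarize(values):
    """Compute the listed statistics for one vector of values."""
    m = mean(values)
    return {
        "Maximum": max(values),
        "Mean": m,
        "Median": median(values),
        "Minimum": min(values),
        "Number of Documents": len(values),
        # Formula as listed in the guide: mean squared deviation from the mean.
        "Standard Deviation": sum((v - m) ** 2 for v in values) / len(values),
        "Sum": sum(values),
    }


doc_stats = summarize(per_document)
sent_stats = summarize(per_sentence)
print(doc_stats)
print(sent_stats)
```

With these numbers, per Document works on (4, 6, 3) while per Sentence works on (4.0, 3.0, 1.0), so the same project can yield quite different maxima and medians depending on the chosen granularity.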

Once data is displayed in the table, the results can be downloaded. After clicking the Calculate button, a Format dropdown and an Export button appear below the table. Choose your format and click the button to start a download of the results. The download will always include all possible statistics and either all features or only the non-null features.

-

Formats: Currently, two formats are possible, .txt and .csv. In the first format, columns are separated by a tab "\t" whereas in the second format they are separated by a comma ",".
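An exported file can be read back with a standard CSV reader by choosing the delimiter that matches the format. The sketch below assumes a purely hypothetical two-column layout with made-up values; the actual columns of the export are not described in this guide.

```python
import csv
import io


def read_statistics(text, file_extension):
    """Parse exported statistics: tab-separated for .txt, comma-separated for .csv."""
    delimiter = "\t" if file_extension == ".txt" else ","
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


# Made-up sample content in both formats (hypothetical column layout).
sample_txt = "Feature\tMean\nNamed_entity.value\t4.33\n"
sample_csv = "Feature,Mean\nNamed_entity.value,4.33\n"

rows_txt = read_statistics(sample_txt, ".txt")
rows_csv = read_statistics(sample_csv, ".csv")
print(rows_txt == rows_csv)
```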

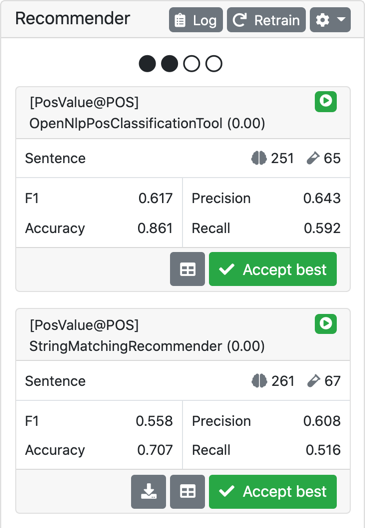

Recommenders

After configuring one or more recommenders in the Project Settings, they can be used during annotation to generate predictions. In the annotation view, predictions are shown as grey bubbles. Predictions can be accepted by clicking once on them. To reject a prediction, double-click on it. For an example of how recommendations look in action, please see the screenshot below.

Suggestions generated by a specific recommender can be deleted by removing the corresponding recommender in the Project Settings. Clicking Reset in the Workflow area will remove all predictions; however, it will also remove all hand-made annotations.

Accept/reject buttons

| Experimental feature. To use this functionality, you need to enable it first by adding recommender.action-buttons-enabled=true to the settings.properties file (see the Admin Guide). |

It is possible to enable explicit Accept and Reject buttons in the annotation interface. These appear left and right of the suggestion marker as the mouse hovers over the marker.

Recommender Sidebar

Clicking the chart icon in the left sidebar tray opens the recommendation sidebar which provides access to several functionalities:

- View the state of the configured recommenders

-

The icon in the top-right corner of the info box indicates the state of the recommender, e.g. if it is active, inactive, or if information on the recommender state is not yet available due to no self-evaluation or train/predict run having been completed yet.

- View the self-evaluation results of the recommenders

-

When evaluation results are available, the info box shows sizes of the training and evaluation data it uses for self-evaluation (for generating actual suggestions, the recommender is trained on all data), and the results of the self-evaluation in terms of F1 score, accuracy, precision and recall.

- View the confusion matrix for a recommender

-